1. Introduction

Medical physics can be one of the most challenging and rewarding applications of physics in society. Today, a patient is rarely diagnosed without the use of imaging technology. Imaging allows us to look inside the body without resorting to invasive methods. Besides being more comfortable and safer for the patient, imaging provides views of anatomy and physiology that cannot be obtained by any other means. Since Roentgen's discovery of X-rays just before the dawn of the 20th century, the last 100 years have seen a veritable boom in medical imaging methods and technology. The original, time-honoured X-ray is now only one of many imaging modalities in a radiologist's arsenal, and the physician can examine the patient in ways that were beyond imagination only 50 years ago.

The mathematical aspects of reconstruction techniques were first explored by the Austrian mathematician J. Radon, who in 1917 derived a general solution for reconstructing a function from its projections; the projection operation is now known as the Radon transform. In 1922, several radiologists working independently devised a method in which the X-ray tube was moved over the patient while the film beneath the patient was moved in the opposite direction. This blurred all but one sharply focused plane of the target. This linear tomographic X-ray technique remained the traditional method of obtaining three-dimensional information until the advent of Computed Tomography (CT). Hounsfield's invention of the CT scanner, unveiled to the public in 1972, was a major breakthrough in biomedical imaging. X-ray CT produces a two-dimensional map of linear attenuation coefficient values (at some effective photon energy).

The first nuclear medicine imaging devices were developed in the late 1940s and early 1950s, when Geiger-Müller counters were used to measure counting rates from iodine-131 in the thyroid gland point by point over the neck, and the numbers were written down in an equivalent array of points. The numbers alone were a poor display, but lines of equal counting rate (isocount lines) were drawn which showed the shape, size and relative uptake of the two lobes, as well as space-occupying lesions. The introduction of the Anger gamma camera in 1957 was the next dramatic change in imaging practice. Notwithstanding the low signal-to-noise ratio of the planar scintigrams obtained with a scintillation camera, a skilled and experienced human observer could visually interpret the images. The work of these pioneer radiologists progressed (unaware of Radon's publication) until 1961, when Oldendorf developed a crude apparatus for gamma-ray transmission imaging with a 131I source. In 1963, Kuhl and Edwards developed an emission tomography system, and in 1966 a transmission system using 241Am, with oscilloscope camera systems for data storage. In 1967, Anger implemented the concept of the rotating gamma camera by rotating the patient at predefined angles in front of the detector. The resulting image yields information about the location and concentration of a photon-emitting radioisotope administered to the patient. This technique is referred to as Single-Photon Emission Computed Tomography (SPECT).

The history of Positron Emission Tomography (PET) can be traced to the early 1950s, when workers in Boston first realised the medical imaging possibilities of a particular class of radioactive isotopes. Whereas most radioactive isotopes decay by the release of gamma rays and electrons, some decay by the release of a positron, the antiparticle of the electron, which has the same mass but a positive charge. Widespread interest in PET, and an acceleration in its technology, were stimulated by the development of reconstruction algorithms for X-ray CT and by improvements in nuclear detector technologies. By the mid-1980s, PET had become a tool for medical diagnosis, for dynamic studies of human metabolism and for studies of brain activation.

After X-ray radiology, ultrasound is believed to be the second most frequently used investigative imaging technique in medical diagnosis. The earliest attempts to use ultrasound date from the late 1930s; ultrasonic imaging based on the pulse-echo principle became possible after the development of fast electronic pulse technology during the Second World War. However, it was not until 1958 that Donald described the prototype of the first commercial two-dimensional ultrasonic scanner, which was used to carry out the first investigations of the pregnant abdomen. Nuclear Magnetic Resonance (NMR) in bulk matter was demonstrated in the mid-1940s, work for which Bloch and Purcell were awarded the Nobel Prize in Physics in 1952. Clinical NMR imaging, which burst on the world in 1980 and is now misguidedly known as MRI, is a form of tomography based on imaging data obtained in quantitative form. The NMR signal intensity is related to the proton spin population in the imaging voxel, but it is modified by the amount of spin-lattice (T1) and spin-spin (T2) relaxation of the protons that has occurred during the timing of the NMR pulse sequence used.

1.1 Medical imaging techniques

Fig. 1.1. : Transaxial slice of the human brain (top) acquired with different imaging modalities (bottom) from left to right: X-ray CT, MRI, SPECT and PET

In general, imaging can address two issues: structure and function. One can either view structures in the body and image anatomy, or view chemical processes and image biochemistry. Structural imaging techniques, which image anatomy, include ultrasound, X-rays, CT and MRI. X-ray imaging distinguishes bone from soft tissue, while CT and MRI delineate organs. Biochemical modalities differ from structural ones in that they follow actual labelled chemical compounds and trace their routes through the body. These methods give functional images of blood flow and metabolism that are essential to diagnosis and to research on the brain, heart, liver, kidneys, bone and other organs of the human body. Since different anatomical structures usually serve different functions and embody different biochemical processes, biochemical imaging can to some degree also give anatomical information. However, the strength of these methods is their ability to distinguish tissue according to metabolism rather than structure.

As an example, Fig. 1.1 shows a transaxial slice through the human brain (top row) acquired with different imaging modalities (bottom row), each giving different information about brain function and anatomy. Several clinical applications are associated with each imaging technique.

1.2 Positron Emission Tomography in medical imaging

Positron emission tomography (PET) is a well-established imaging modality which provides physicians with information about the body's chemistry not available through any other procedure. Three-dimensional (3D) PET provides qualitative and quantitative information about the volume distribution of biologically significant radiotracers after injection into the human body. Unlike X-ray CT or MRI, which look at anatomy or body morphology, PET studies metabolic activity or body function. PET has been used primarily in cardiology, neurology, and oncology.

Within the spectrum of medical imaging, sensitivity ranges from the detection of millimolar (10^-3 M) concentrations in MRI to picomolar (10^-12 M) concentrations in PET: a 10^9 difference (Jones 1996). With respect to specificity, the range extends from detecting tissue density and water content to identifying a specific molecule labelled with a radioactive form of one of its natural constituent elements. This sensitivity is a prerequisite for studying pathways and binding sites that function below the micromolar level, and for avoiding pharmacological effects when a labelled molecule is administered to study its inherent biodistribution.

The sensitivity of in vivo tracer studies is achieved par excellence with PET, which relies on electronic collimation and thereby offers a wide acceptance angle for detecting the emitted annihilation photons. Consequently, for comparable axial fields of view, the sensitivity of PET per disintegration is two orders of magnitude greater than that of SPECT cameras. A further sensitivity advantage for detecting low molecular concentrations arises in PET from the use of molecules labelled with short-lived radioisotopes. With respect to imaging molecular pathways and interactions, the specificity of PET arises from the use of radioisotopes such as 18F and, to a lesser extent, 11C to label biochemical, physiological and pharmaceutical molecules with a radioactive form of one of their natural constituent elements. SPECT's ability to image some chemical processes is hindered by the fact that its isotopes are relatively large atoms which, for steric reasons, cannot be used to label some compounds.

There is no doubt that PET has a long-term future in the study of receptors, neurotransmitters, drug binding and general pharmacology. In addition, it clearly still has unique roles in biochemistry, metabolism and physiology. With regard to research, the emphasis is on using PET to gain new information on disease processes, to assess the efficacy and mechanisms of therapy and to help drug development and discovery. Deriving new information on brain disease is the focus of many neuroscience research programmes based on PET, with an ever-increasing interest in the psychiatric area. Disease staging and assessment of therapeutic mechanism and efficacy are emerging not only for the brain but also in cardiology and oncology.

1.3 The aim of this work

The aim of this project is to develop a versatile Monte Carlo simulation environment and a reconstruction library that take advantage of modern software development and parallel computing technology, and to use the simulation package as a development and evaluation tool for scatter correction methods and image reconstruction algorithms in 3D PET. This work was part of the PARAPET (PARAllel PET Scan) project, funded by the European Commission and the Swiss Government, which focused on the development of improved iterative methods for volume reconstruction of PET data through the use of high-performance parallel computing technology. The project also includes the development of procedures and software for the evaluation of a library of simulated, experimental phantom and clinical data.

The project involves leading players from the UK (the Hammersmith Hospital and Brunel University), Italy (the Ospedale San Raffaele, Milan), Switzerland (Geneva University Hospital) and Israel (Israel Institute of Technology, Haifa), together with the scanner manufacturer ELGEMS, Israel, and the leading European supplier of embedded parallel processing hardware, Parsytec, Germany. The project has a strong European dimension, bringing together leading researchers and clinicians and a leading hardware vendor and scanner manufacturer from across the European Union in a collaboration strengthened by the participation of peer organisations in Switzerland and Israel.

In addition to the Monte Carlo simulation package, the project will produce a package of analytical and iterative reconstruction algorithms for 3D PET imaging and make them available to clinical environments. A major aim of the project is to create parallel versions of these algorithms to run on distributed-memory multiprocessor machines. Although the project partners use Parsytec CC series machines, the main goal is to create parallel software tools that achieve:

- speed-up on the basis of elapsed wall-clock time;
- scale-up in terms of progressively larger data sets as found on a range of scanners;
- platform independence, thus running on clusters of high-end PCs and other distributed-memory multiprocessor hardware architectures.
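The speed-up target above is conventionally quantified as S(p) = T(1)/T(p), where T(p) is the elapsed wall-clock time on p processors, and the parallel efficiency as E(p) = S(p)/p. The minimal sketch below assumes these standard definitions; the function names and the timings are hypothetical and illustrative only, not PARAPET measurements.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speed-up S(p) = T(1) / T(p), from elapsed wall-clock times."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, n_procs: int) -> float:
    """Parallel efficiency E(p) = S(p) / p; 1.0 corresponds to linear speed-up."""
    return speedup(t_serial, t_parallel) / n_procs


# Hypothetical wall-clock timings in seconds (illustrative, not measured data).
timings = {1: 800.0, 2: 410.0, 4: 215.0, 8: 118.0}

for p, t in timings.items():
    s = speedup(timings[1], t)
    e = efficiency(timings[1], t, p)
    print(f"p={p}: speed-up={s:.2f}, efficiency={e:.2f}")
```

An efficiency that stays close to 1 as p grows indicates near-linear scaling; scale-up is assessed similarly, by growing the data set together with the number of processors.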

The following paragraphs summarise the contents of this thesis:

- An object-oriented library for 3D PET reconstruction has been developed (Labbé et al 1999a, Labbé et al 1999b). This library has been designed so that it can be used with many algorithms and scanner geometries. Its flexibility, portability and modular design have greatly helped in developing new iterative algorithms (Ben Tal et al 1999, Jacobson et al 1999), in comparing iterative and analytic methods in different clinical situations, and in parallelising the reconstruction algorithms.

- The methodological basis of the Monte Carlo method is derived, and a critical review of its areas of application in nuclear medical imaging is presented. This is illustrated with examples of some useful features of such sophisticated tools, in connection with both common computing facilities and more powerful multiple-processor parallel processing systems (Zaidi 1999a).

- A Monte Carlo software package, Eidolon, has been developed which accurately simulates volume imaging PET tomographs. The code has been designed to evaluate PET studies of both simple shape-based phantom geometries and more complex non-homogeneous anthropomorphic phantoms (Zaidi et al 1997a, Zaidi et al 1999a). The package is well documented (Zaidi et al 1997b) and has been in the public domain since September 1999.

- The Eidolon software package was successfully ported to a parallel architecture based on PowerPC 604 processors running under AIX 4.1. A parallelisation paradigm independent of the chosen parallel architecture was used, and a linear speed-up with the number of computing nodes was achieved (Zaidi et al 1998).

-

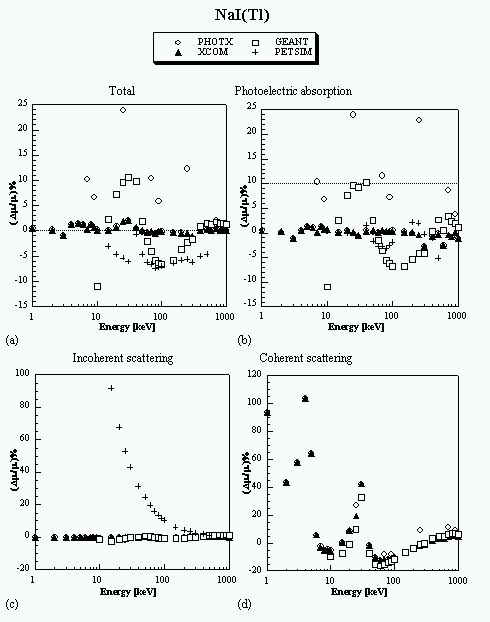

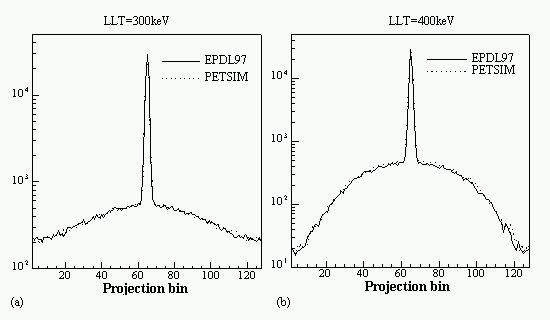

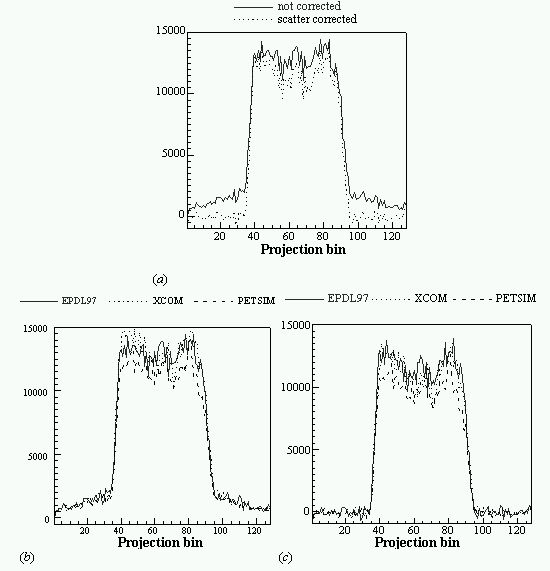

A comparison between different photon cross section libraries and parametrizations implemented in Monte Carlo simulation packages developed for positron emission tomography and the most recent Evaluated Photon Data Library (EPDL97) developed by the Lawrence Livermore National Laboratory (LLNL) in collaboration with NIST was performed for several human tissues and common detector materials for energies from 1 keV to 1 MeV. This latter library is thought to be more accurate and was carefully designed in the form of look-up tables and integrated in our simulation environment significantly improving the efficiency of the Eidolon simulation package (Zaidi et al 1999c, Zaidi 1999d).

-

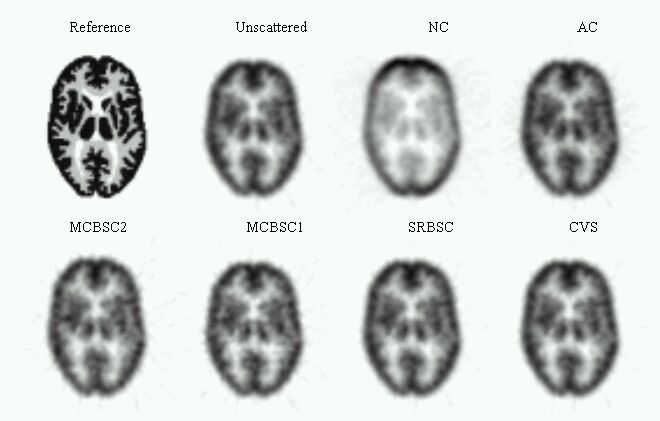

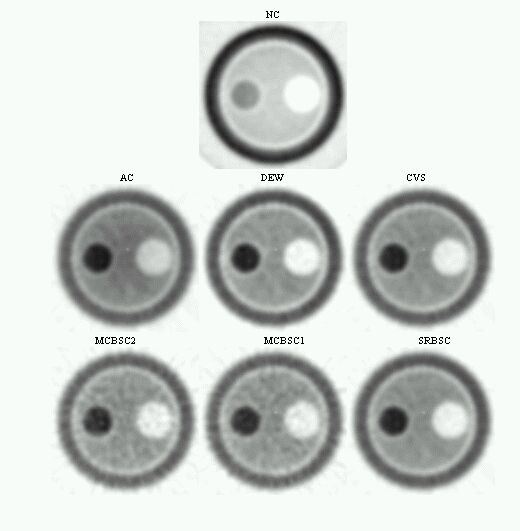

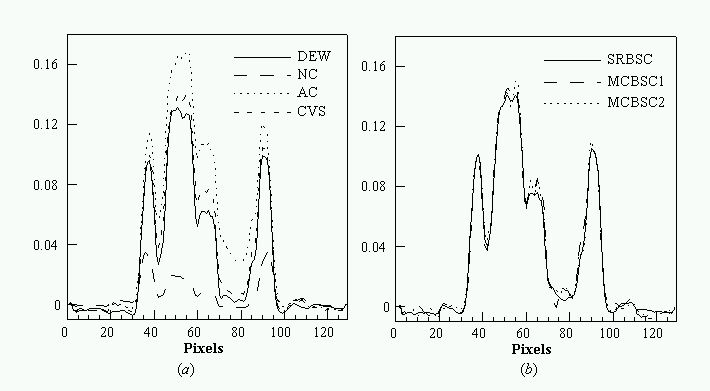

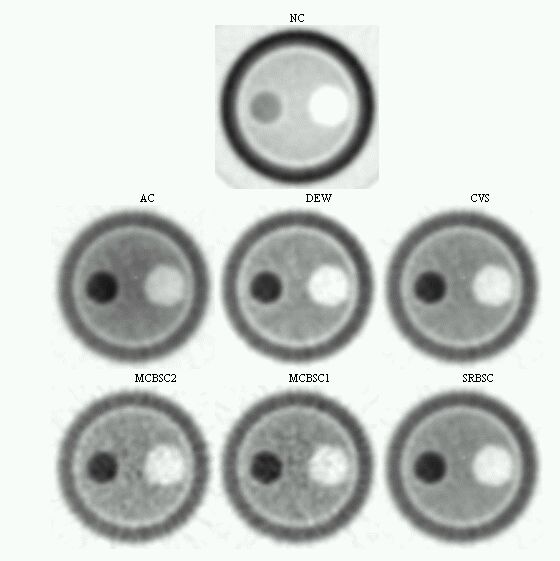

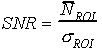

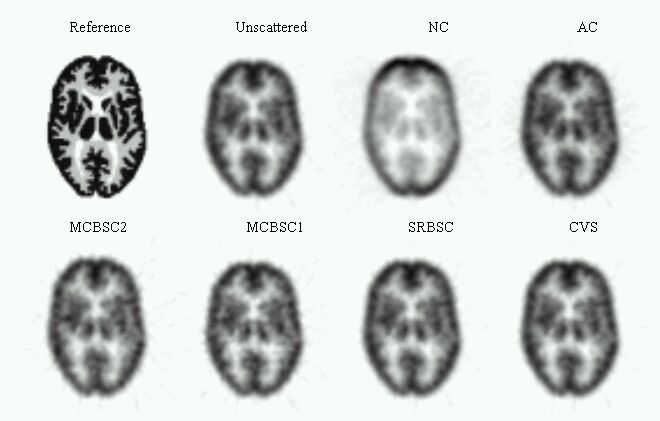

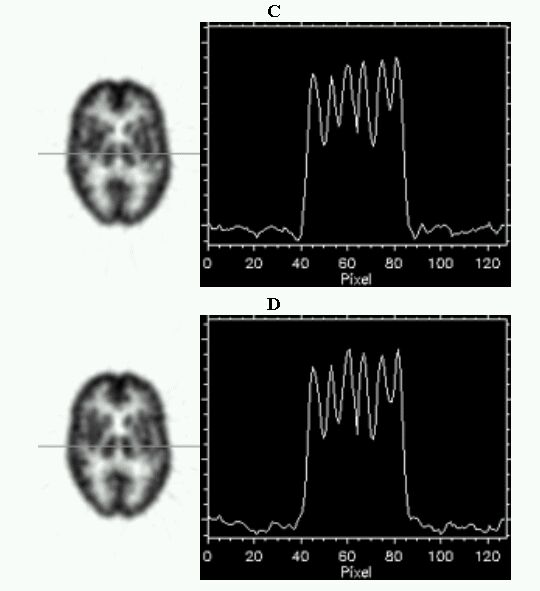

An evaluation of common scatter correction techniques has shown that an accurate modelling of the scatter component in the projection data is necessary to estimate for an accurate scatter correction. An improvement and extension to whole-body 3D imaging of a recently proposed Monte Carlo-based scatter correction method (Levin et al 1995) and a new approach called Statistical Reconstruction Based Scatter Correction (SRBSC) based on estimating the low frequency component corresponding to scatter events using OSEM are proposed in this work (Zaidi 1999e) and evaluated against more common correction methods (Zaidi 1999f).
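Cross-section look-up tables of the kind mentioned above are typically tabulated on an energy grid and interpolated between grid points. The sketch below assumes log-log interpolation, a common choice for smoothly varying photon cross sections; the class `CrossSectionTable` is hypothetical, and the placeholder data follow an arbitrary power law rather than actual EPDL97 values. It is not the Eidolon implementation.

```python
import bisect
import math


class CrossSectionTable:
    """Hypothetical look-up table for sigma(E), queried by log-log interpolation."""

    def __init__(self, energies_kev, sigmas):
        if len(energies_kev) != len(sigmas) or len(energies_kev) < 2:
            raise ValueError("need at least two (energy, sigma) pairs")
        # Store logarithms once, so each query is one binary search plus one
        # linear interpolation in log space.
        self.log_e = [math.log(e) for e in energies_kev]
        self.log_s = [math.log(s) for s in sigmas]

    def __call__(self, e_kev):
        x = math.log(e_kev)
        if not self.log_e[0] <= x <= self.log_e[-1]:
            raise ValueError("energy outside tabulated range")
        # Upper bracketing index, clamped so that [i - 1, i] is a valid interval.
        i = min(max(bisect.bisect_left(self.log_e, x), 1), len(self.log_e) - 1)
        x0, x1 = self.log_e[i - 1], self.log_e[i]
        y0, y1 = self.log_s[i - 1], self.log_s[i]
        return math.exp(y0 + (y1 - y0) * (x - x0) / (x1 - x0))


# Placeholder data following sigma = 2 E^-3 (arbitrary units, not EPDL97 values).
energies = [1.0, 10.0, 100.0, 1000.0]
table = CrossSectionTable(energies, [2.0 * e ** -3 for e in energies])
print(table(50.0))  # log-log interpolation reproduces a pure power law exactly
```

Precomputing the logarithms trades a little memory for faster queries, which matters when cross sections are looked up at every photon interaction.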

In Chapter 2, the physical principles, basic features and performance parameters of PET are outlined, and some of the practical issues involved in obtaining quantitative data are discussed. In Chapter 3, the problem of image reconstruction from projections is defined in the context of 2D and 3D PET. Various analytical, rebinning and iterative algorithms proposed for 3D PET reconstruction are then reviewed, and some innovative approaches resulting from the PARAPET project and their software implementations are presented. As 3D reconstruction algorithms are time-consuming, issues in parallelisation and scalability are also discussed, with the aim of reducing computation time in practice and making 3D PET more generally accessible and clinically acceptable. In Chapter 4, the derivation and methodological basis of the Monte Carlo method are presented, followed by an overview of existing simulation programs and a critical review of their areas of application in PET imaging. In Chapter 5, the development, validation and main features of Eidolon, an object-oriented Monte Carlo simulator for 3D PET, are described in detail. A fast implementation of the program on a MIMD parallel architecture and the improvement of its efficiency and accuracy through the implementation of an up-to-date photon cross section library are then presented in Chapter 6. In Chapter 7, issues in scatter modelling and correction techniques in 3D PET are discussed and two new approaches are presented. These approaches are characterised and evaluated against common scatter correction techniques using phantom simulations, experimental phantoms and clinical studies. Finally, key conclusions and suggested continuations of this research are presented in Chapter 8.

2. Positron Emission Tomography : Physics and Instrumentation

The key advantage of PET is its higher sensitivity, which results from the natural collimation of the annihilation photons through a physical process (their emission in opposite directions), without the use of absorbing collimators. Like SPECT, PET uses tracers, but these are labelled with positron-emitting isotopes such as fluorine-18 (18F) and carbon-11 (11C). PET tracers possess key advantages over SPECT tracers. They have relatively short half-lives, which reduce the duration of the patient's exposure and thus the absorbed dose. In addition, because 18F is similar in size to hydrogen, it can be used to replace hydrogen in biologically active compounds without altering their function. This opens up an entire group of metabolites which could not be labelled for SPECT. The major drawback is that the short half-life puts a practical upper limit on the activity of the manufactured isotope that can be delivered, so a nearby or on-site cyclotron facility is required. Many of the present design objectives of positron imaging tomographs focus on meeting the needs of brain imaging in neurology and of whole-body imaging in oncology. This section describes the physical principles underlying PET and discusses some of the intrinsic advantages that PET has over SPECT imaging.

2.1 The physical principles of PET

In PET, pairs of 511 keV photons arising from electron-positron annihilations are detected in coincidence, allowing the concentration of a positron-emitting radionuclide to be measured. After intravenous injection of the positron-emitting radiopharmaceutical, patients are generally referred to waiting rooms to allow it to accumulate in the tissue or organ of interest. Block detectors surrounding the patient are then used to detect the emerging annihilation photons.

A positron emission tomograph consists of a set of detectors, usually arranged in adjacent rings that surround the field of view (FOV), in order to image the spatial distribution of a positron-emitting radiopharmaceutical (Fig. 2.1). After being sorted into parallel projections, the lines of response (LORs) defined by the coincidence channels are used to reconstruct the three-dimensional (3D) distribution of the positron-emitting tracer within the patient. An event is registered if both crystals detect an annihilation photon within a fixed coincidence time window, whose width depends on the timing properties of the scintillator (of the order of 10 ns for bismuth germanate, BGO). A pair of detectors is sensitive only to events occurring in the tube of response joining the two detectors, thereby registering directional information (electronic collimation). Coincidence detection has many advantages over single-photon detection: electronic collimation eliminates the need for physical collimation, thereby significantly increasing sensitivity and improving spatial resolution. Accurate corrections for background and physical effects are, however, essential if absolute measurements of tissue tracer concentration are to be made.
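The coincidence criterion described above can be illustrated in software. The sketch below is a simplified, hypothetical model (real tomographs perform coincidence detection in dedicated electronics and handle multiple and random coincidences more elaborately): time-ordered single events are grouped by a window opened at each unpaired single, and a group is accepted only if it contains exactly two singles on different detectors. The function `sort_coincidences`, the data layout and the 10 ns default (taken from the order of magnitude quoted for BGO) are assumptions.

```python
from typing import List, Tuple

Single = Tuple[float, int]     # (arrival time in ns, detector index)
Coincidence = Tuple[int, int]  # pair of detector indices defining an LOR


def sort_coincidences(singles: List[Single], window_ns: float = 10.0) -> List[Coincidence]:
    """Pair time-ordered singles that fall within one coincidence window.

    A single opens a window; if exactly one other single arrives inside it,
    the pair is accepted. Windows holding three or more singles (multiples)
    are discarded, as are pairs recorded on the same detector.
    """
    events = sorted(singles)
    pairs: List[Coincidence] = []
    i = 0
    while i < len(events):
        t0 = events[i][0]
        j = i + 1
        while j < len(events) and events[j][0] - t0 <= window_ns:
            j += 1
        group = events[i:j]
        if len(group) == 2 and group[0][1] != group[1][1]:
            a, b = group[0][1], group[1][1]
            pairs.append((min(a, b), max(a, b)))
        i = j  # every single inside this window has been consumed
    return pairs


singles = [(0.0, 3), (4.0, 67), (100.0, 12), (250.0, 5), (256.0, 70)]
print(sort_coincidences(singles))
```

In this example the isolated single at 100 ns is rejected because no partner arrives within the window, which is how electronic collimation discards photons whose partner escaped detection.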

Fig. 2.1. : Originating from the decay of the radionuclide, a positron travels a few millimetres before it annihilates with a nearby atomic electron, producing two 511 keV photons emitted in opposite directions

A positron emission tomograph consists of a set of detectors, usually arranged in adjacent rings surrounding the FOV. Pairs of annihilation photons are detected in coincidence. The size of the transaxial FOV is defined by the number of opposite detectors in coincidence.

2.1.1 Positron emission and annihilation

Proton-rich isotopes may decay via positron emission, in which a proton in the nucleus is converted into a neutron, emitting a positron and a neutrino. The daughter isotope has an atomic number one less than the parent. Examples of isotopes which decay via positron emission are shown in Table 2.1.

As positrons travel through human tissues, they give up their kinetic energy mainly by Coulomb interactions with electrons. As the rest mass of the positron is the same as that of the electron, positrons may undergo large deviations in direction with each Coulomb interaction, and they follow a tortuous path through the tissue as they give up their kinetic energy (Fig. 2.2).

Table 2.1. : Physical characteristics and production method of commonly used positron-emitting radioisotopes

| Radioisotope | Half-life (min) | Maximum positron energy (MeV) | Positron range in water (FWHM, mm) | Production method |
|---|---|---|---|---|
| 11C | 20.3 | 0.96 | 0.28 | cyclotron |
| 13N | 9.97 | 1.19 | 0.35 | cyclotron |
| 15O | 2.03 | 1.70 | 1.22 | cyclotron |
| 18F | 109.8 | 0.64 | 0.22 | cyclotron |
| 68Ga | 67.8 | 1.89 | 1.35 | generator |
| 82Rb | 1.26 | 3.35 | 2.60 | generator |
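The practical consequence of the short half-lives in Table 2.1, and hence the need for a nearby cyclotron, follows from the exponential decay law A(t) = A(0) exp(-ln 2 · t / T½). The sketch below evaluates it with the half-lives from the table; the 60-minute transport time is a hypothetical example.

```python
import math

# Half-lives in minutes, taken from Table 2.1.
HALF_LIFE_MIN = {"11C": 20.3, "13N": 9.97, "15O": 2.03, "18F": 109.8,
                 "68Ga": 67.8, "82Rb": 1.26}


def remaining_fraction(isotope: str, elapsed_min: float) -> float:
    """Fraction A(t)/A(0) = exp(-ln(2) * t / T_half) left after elapsed_min minutes."""
    decay_const = math.log(2) / HALF_LIFE_MIN[isotope]  # decay constant, 1/min
    return math.exp(-decay_const * elapsed_min)


# Activity left after a hypothetical 60-minute transport from an off-site cyclotron.
for iso in ("18F", "11C", "15O"):
    print(f"{iso}: {remaining_fraction(iso, 60.0):.3g} of the initial activity remains")
```

About two thirds of the 18F activity survives such a delay, whereas only about an eighth of the 11C survives and 15O is essentially gone, which is why the shorter-lived isotopes require on-site production.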

When positrons reach thermal energies, they start to interact with electrons either by annihilation, producing two 511 keV photons which are anti-parallel in the positron's frame, or by forming a hydrogen-like bound state called positronium. In its ground state, positronium has two forms: ortho-positronium, where the spins of the electron and positron are parallel, and para-positronium, where the spins are anti-parallel. Para-positronium decays by self-annihilation, generating two anti-parallel 511 keV photons, whereas ortho-positronium self-annihilates by the emission of three photons. Both forms are susceptible to the "pick-off" process, in which the positron annihilates with another electron. Free annihilation and the pick-off process are responsible for over 80% of the decay events. Variations in the momentum of the interacting particles involved in free annihilation and pick-off result in an angular uncertainty in the direction of the 511 keV photons of around 4 mrad in the observer's frame (Rickey et al 1992). In a PET tomograph of diameter 100 cm and active transaxial FOV of 60 cm, this results in a positional inaccuracy of 2-3 mm. The finite positron range and the non-collinearity of the annihilation photons give rise to an inherent positional inaccuracy not present in conventional single-photon emission techniques. However, other characteristics of PET, discussed below, more than offset this disadvantage.

Fig. 2.2. : Schematic representation of positron emission and annihilation

2.1.2 Positron emitting radiopharmaceuticals

Nuclear medicine imaging involves studying the localisation of administered radiopharmaceuticals by detecting the radiation emitted by their radionuclides. Radiopharmaceuticals can be administered to the patient by injection, orally or by inhalation. A radiopharmaceutical consists of a radionuclide with suitable radiation characteristics, which is usually 'labelled' to a carrier by a chemical reaction. When the radionuclide is labelled to a carrier, the main interest is the physiological and biological behaviour of the carrier, and the radionuclide serves only as a tracer. Using radionuclides as tracers for in vivo studies has several advantages. The physiological behaviour and metabolism of different organs can be studied rather than only their morphological structure, and important objectives such as the detection of lesions can be achieved. Moreover, since in most disease processes physiological or metabolic changes precede changes in anatomy, nuclear medicine has the capability to provide earlier diagnosis.

While PET was originally used as a research tool, in recent years it has come to play an increasingly important clinical role. The largest area of clinical use of PET is oncology, where the most widely used tracer is 18F-fluoro-deoxy-glucose (18F-FDG). This radiopharmaceutical is relatively easy to synthesise with a high radiochemical yield. It follows a metabolic pathway similar to that of glucose in vivo, except that it is not metabolised to CO2 and water but remains trapped within tissue. This makes it well suited for use as a tracer of glucose uptake, which is of interest in oncology because proliferating cancer cells have a higher than average rate of glucose metabolism. 11C-methionine is also used in oncology, where it acts as a marker of protein synthesis.

PET also has applications in cardiology, where 13N-NH3 is used as a tracer of myocardial perfusion. When 13N-NH3 and 18F-FDG scans of the same patient are interpreted together, PET can distinguish between viable and non-viable tissue in poorly perfused areas of the heart. Such information can be extremely valuable in identifying candidates for coronary bypass surgery. PET has been used in neurology in a wide range of conditions, in particular in severe focal epilepsy, where it may complement magnetic resonance imaging. Another reason for the importance of PET is that, unlike conventional radiotracer techniques, it offers the possibility of quantitative measurements of biochemical and physiological processes in vivo. This is important in both research and clinical applications. For example, semi-quantitative measurements of FDG uptake in tumours have been shown to be useful in grading disease (Hoh et al 1997). By modelling the kinetics of tracers in vivo, it is also possible to obtain quantitative values of physiological parameters such as myocardial blood flow in ml/min/g or FDG uptake in mmol/min/g, provided that the acquired data are an accurate measure of tracer concentration. Absolute values of myocardial blood flow can be useful in, for example, the identification of triple-vessel coronary artery disease, and absolute values of FDG uptake can be useful in studies of cerebral metabolism. Some examples of radiopharmaceuticals used in PET are shown in Table 2.2, together with some typical clinical and research applications.
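A widely used semi-quantitative index of FDG uptake, not named in the text above, is the body-weight standardised uptake value (SUV): the tissue activity concentration divided by the injected activity per unit body mass. The sketch below assumes this definition, a tissue density of 1 g/mL and decay correction of the injected activity to scan time using the 18F half-life from Table 2.1; the function `suv_bw` and the example numbers are hypothetical, and it is not presented as the method of the cited study.

```python
import math

F18_HALF_LIFE_MIN = 109.8  # from Table 2.1


def suv_bw(tissue_kbq_per_ml: float, injected_mbq: float,
           body_weight_kg: float, minutes_since_injection: float) -> float:
    """Body-weight SUV = C_tissue / (A_injected / W).

    With C in kBq/mL, A in MBq and W in kg, the factors of 1000 cancel,
    assuming a tissue density of 1 g/mL. The injected activity is
    decay-corrected to the time of the scan.
    """
    decay = math.exp(-math.log(2) * minutes_since_injection / F18_HALF_LIFE_MIN)
    activity_at_scan_mbq = injected_mbq * decay
    return tissue_kbq_per_ml * body_weight_kg / activity_at_scan_mbq


# Hypothetical example: 5 kBq/mL in a lesion, 370 MBq injected, 70 kg patient, scan at 60 min.
print(f"SUV = {suv_bw(5.0, 370.0, 70.0, 60.0):.2f}")
```

An SUV of 1 corresponds to a uniform distribution of the tracer throughout the body; because the index ignores tracer kinetics, it is semi-quantitative rather than a true metabolic rate.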

The prospects for further development of several agents currently under investigation have improved, and a number of established clinical procedures have gained potential with the addition of PET. These complementary roles of PET all contribute to increased accuracy in nuclear medicine investigations.

Table 2.2. : Examples of positron-emitting radiopharmaceuticals used in clinical PET studies with some representative investigations where quantification is of interest

| Radioisotope | Tracer compound | Physiological process | Clinical application |
|---|---|---|---|
| 11C | methionine | protein synthesis | oncology |
| 11C | flumazenil | benzodiazepine receptor antagonist | epilepsy |
| 11C | raclopride | D2 receptor antagonist | movement disorders |
| 13N | ammonia | blood perfusion | myocardial perfusion |
| 15O | carbon dioxide | blood perfusion | brain activation studies |
| 15O | water | blood perfusion | brain activation studies |
| 18F | fluoro-deoxy-glucose | glucose metabolism | cardiology, neurology, oncology |
| 18F | fluoride ion | bone metabolism | oncology |
| 18F | fluoro-misonidazole | hypoxia | oncology (response to therapy) |

2.1.3 Interaction of photons with matter

When electromagnetic radiation passes through matter, some photons are totally absorbed, some penetrate the matter without interaction, and some are scattered in different directions, with or without losing energy. Unlike a beam of charged particles, a well-collimated beam of photons shows a truly exponential attenuation in matter. This is because photons are absorbed or scattered in a single event: the collimated photons that penetrate the absorber have had no interaction, while those removed from the beam have been eliminated in a single event. For the energies of interest in nuclear medicine imaging, a photon interacts with the surrounding matter through four main processes, whose probabilities depend on the photon energy, E, and the atomic number, Z, of the material.
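The single-event nature of photon interactions gives the narrow-beam law I/I0 = exp(-μx), and it is also the basis of photon transport in Monte Carlo codes: the distance to the next interaction can be sampled by inverting the exponential distribution, s = -ln(ξ)/μ, with ξ uniform on (0, 1]. The sketch below compares the two for water, assuming an approximate linear attenuation coefficient of 0.096 cm^-1 at 511 keV; the function names are illustrative and not taken from any particular package.

```python
import math
import random

# Approximate linear attenuation coefficient of water at 511 keV (1/cm); assumed value.
MU_WATER_511 = 0.096


def transmitted_fraction(mu_per_cm: float, thickness_cm: float) -> float:
    """Narrow-beam transmission I/I0 = exp(-mu * x)."""
    return math.exp(-mu_per_cm * thickness_cm)


def sample_free_path(mu_per_cm: float, rng: random.Random) -> float:
    """Distance to the next interaction, sampled by inverting the exponential CDF.

    rng.random() lies in [0, 1), so 1 - rng.random() lies in (0, 1] and the
    logarithm is always finite.
    """
    return -math.log(1.0 - rng.random()) / mu_per_cm


rng = random.Random(1)  # fixed seed for reproducibility
paths = [sample_free_path(MU_WATER_511, rng) for _ in range(100_000)]
x = 10.0  # cm of water
mc_fraction = sum(p > x for p in paths) / len(paths)
print(f"analytic I/I0 = {transmitted_fraction(MU_WATER_511, x):.3f}, "
      f"Monte Carlo = {mc_fraction:.3f}")
```

The fraction of sampled paths longer than x converges to exp(-μx), and their mean converges to the mean free path 1/μ (about 10 cm here).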

2.1.3.1 Photoelectric absorption

When a photoelectric interaction occurs, the energy of a photon is completely transferred to an atomic electron, which may thus gain sufficient kinetic energy to be ejected from its shell. The energy of the incoming photon must, however, exceed the binding energy of the electron. Because the entire atom participates, the photoelectric process may be visualised as an interaction of the primary photon with the atomic electron cloud in which the entire photon energy E is absorbed and an electron (usually from the K or L shell) is ejected from the atom with an energy:

$$E_e = E - T_e \qquad (2.1)$$

where $T_e$ is the binding energy of the ejected electron. A photoelectric interaction leaves a vacancy in the atomic electron shell, which is rapidly filled by an electron from one of the outer shells. The difference in binding energy between the two shells can be emitted either as a characteristic X-ray photon or as one or more Auger electrons. The relative probability of emitting characteristic X-ray photons is given by the fluorescence yield. Characteristic X-ray emission is more probable for high-Z materials.

The cross section for a photoelectric interaction depends strongly on the photon energy and the atomic number of the material and can be described approximately by :

$$\sigma_{ph} \propto \frac{Z^{4}}{E^{3}} \qquad (2.2)$$

2.1.3.2 Incoherent (Compton) scattering

In this process, the photon interacts with an atomic electron and is scattered through an angle θ relative to its incoming direction. Only a fraction of the photon energy is transferred to the electron. After incoherent (or Compton) scattering, the scattered photon energy $E_s$ is given by

$$E_s = \frac{E}{1 + \frac{E}{m_0 c^2}\left(1 - \cos\theta\right)} \qquad (2.3)$$

where $m_0$ is the rest mass of the electron and c is the speed of light, so that $m_0c^2$ = 511 keV is the electron rest-mass energy. Equation (2.3) is derived on the assumption of an elastic collision between the photon and an electron that is initially free and at rest. It is evident from Eq. (2.3) that the maximum energy transfer takes place when the photon is back-scattered through 180° relative to its incoming direction, and that the relative energy transfer from the photon to the electron increases with increasing photon energy. Equation (2.3) is plotted in Fig. 2.3 for 511 keV photons and illustrates that rather large angular deviations occur for a relatively small energy loss.
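The Compton relation, E_s = E / [1 + (E/m0c²)(1 - cos θ)], is straightforward to evaluate numerically, which makes the behaviour plotted in Fig. 2.3 easy to reproduce. The minimal sketch below tabulates the residual energy of a 511 keV photon for a few scattering angles; the function name is illustrative.

```python
import math

MEC2_KEV = 510.999  # electron rest-mass energy m0*c^2 (keV)


def compton_scattered_energy(e_kev: float, theta_rad: float) -> float:
    """Energy of a photon of energy e_kev after Compton scattering through theta_rad."""
    return e_kev / (1.0 + (e_kev / MEC2_KEV) * (1.0 - math.cos(theta_rad)))


# Residual energy of a 511 keV annihilation photon for a few scattering angles.
for deg in (0, 10, 30, 60, 90, 180):
    es = compton_scattered_energy(511.0, math.radians(deg))
    print(f"{deg:3d} deg -> {es:6.1f} keV")
```

A 30° scatter removes only about 60 keV, whereas back-scattering leaves about one third of the initial energy (roughly 170 keV), consistent with the observation that large angular deviations cost relatively little energy.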

Fig. 2.3. : The residual energy of a 511 keV photon after Compton scattering through a given angle

The differential cross section per electron for incoherent scattering of unpolarised photons, derived by Klein and Nishina (1927), can be calculated from

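$$\frac{d\sigma_{KN}}{d\Omega} = \frac{r_e^2}{2}\left(\frac{E_s}{E}\right)^2\left(\frac{E_s}{E} + \frac{E}{E_s} - \sin^2\theta\right) \qquad (2.4)$$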

where $r_e$ is the classical radius of the electron, given by

$$r_e = \frac{e^2}{4\pi\varepsilon_0 m_0 c^2} \approx 2.818 \times 10^{-15}\ \mathrm{m} \qquad (2.5)$$

Equation (2.4) has been derived assuming that the interacting electron is free and at rest. To a first approximation, the differential cross section per atom can be calculated by multiplying the Klein-Nishina cross section by the number of electrons, Z. To take into account the fact that atomic electrons are bound to the nucleus, the Klein-Nishina cross section per electron should instead be multiplied by the incoherent scattering function S(x,Z), i.e.

$$\frac{d\sigma_{\mathrm{incoh}}}{d\Omega} = \frac{d\sigma_{\mathrm{KN}}}{d\Omega}\, S(x,Z) \qquad (2.6)$$

where $x = \sin(\theta/2)/\lambda$ is the momentum transfer parameter and $\lambda$ is the photon wavelength. For high-energy photons, the function S(x,Z) approaches the atomic number Z. The cross section per atom thus approaches Z times the Klein-Nishina cross section.


2.1.3.3 Coherent (Rayleigh) scattering

In coherent (Rayleigh) scattering the photon interacts with the whole atom, in contrast to incoherent scattering in which the photon interacts only with an atomic electron. The transfer of energy to the atom can be neglected due to the large rest mass of the nucleus. Coherent scattering therefore results only in a small change in the direction of the photon since the momentum change is so small.

The differential cross section per atom is given by the classical Thomson cross section per electron multiplied by the square of the atomic form factor F(x,Z):

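$$\frac{d\sigma_{\mathrm{coh}}}{d\Omega} = \frac{r_e^{2}}{2}\left(1 + \cos^{2}\theta\right) F^{2}(x,Z) \qquad (2.7)$$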

where $\theta$ is the polar angle through which the photon is scattered. The form factor approaches the atomic number Z for interactions involving small changes in the direction of the photon and for low photon energies. It decreases with increasing scattering angle. The angular distribution of coherently scattered photons is thus peaked in the forward direction.

2.1.3.4 Pair production

If the energy of the incoming photon is greater than 1.022 MeV (twice the electron rest-mass energy), there is a finite probability that the photon interacts in the field of the nucleus and is converted into an electron-positron pair. The electron and the positron carry a certain kinetic energy, which they lose through inelastic collisions with the surrounding atoms. The cross section $\sigma_{\mathrm{pair}}$ is proportional to the square of the atomic number Z. This type of interaction is not important for the photon energies commonly used in nuclear medicine.

$$\sigma_{\mathrm{pair}} \propto Z^{2} \qquad (2.8)$$

2.1.3.5 The linear attenuation coefficient

When a photon passes through matter, any of the four interaction processes described above may occur. The probability of each process is proportional to its cross section per atom. The probability that a photon traverses a given amount of absorber without any kind of interaction is the product of the survival probabilities for each type of interaction. This probability is therefore determined by the total linear attenuation coefficient, which is the sum of the contributions of the individual processes:

$$\mu = \mu_{\mathrm{pe}} + \mu_{\mathrm{incoh}} + \mu_{\mathrm{coh}} + \mu_{\mathrm{pair}} \qquad (2.9)$$

This attenuation coefficient is a measure of the number of primary photons that have undergone interactions. It must be clearly distinguished from the absorption coefficient, which is always smaller and measures the energy absorbed by the medium. The mass attenuation coefficient is defined as the linear attenuation coefficient divided by the density $\rho$ of the absorber. The attenuation of an incoming narrow beam of photons passing through a non-homogeneous object can be described by

$$\Phi = \Phi_0 \exp\left(-\int_0^{d} \mu(l)\, dl\right) \qquad (2.10)$$

where $\Phi$ is the photon fluence measured after the attenuator, $\Phi_0$ is the photon fluence measured without the attenuator and $d$ is the thickness of the attenuator. Linear attenuation coefficients are often called "narrow-beam" or "broad-beam" coefficients, depending on whether they exclude or include scattered transmission photons. Fig. 2.4. illustrates this concept with a simple single-detector system measuring uncollimated and collimated photon sources. Accurate attenuation correction in PET ideally requires narrow-beam transmission measurements. Whether these are achieved depends, of course, on the geometry of the transmission data acquisition system.
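The following is a minimal sketch of Eq. (2.10) for a non-homogeneous object. It replaces the integral by a sum over thin slabs. The attenuation coefficients at 511 keV are approximate values assumed for illustration: about 0.096 cm⁻¹ for water and roughly 0.17 cm⁻¹ for cortical bone. The path composition is hypothetical.

```python
import math


def narrow_beam_transmission(mu_per_cm, step_cm):
    """Phi/Phi_0 for a narrow beam: a discretised form of Eq. (2.10).

    mu_per_cm: linear attenuation coefficients of consecutive slabs along the
    beam path, each of thickness step_cm.
    """
    line_integral = sum(mu * step_cm for mu in mu_per_cm)  # approximates the integral of mu dl
    return math.exp(-line_integral)


# Approximate values at 511 keV, assumed for this example only.
MU_WATER = 0.096  # cm^-1, soft tissue approximated by water
MU_BONE = 0.17    # cm^-1, approximate value for cortical bone

# Hypothetical 20 cm path sampled every 0.5 cm: 8 cm tissue, 4 cm bone, 8 cm tissue.
path = [MU_WATER] * 16 + [MU_BONE] * 8 + [MU_WATER] * 16

if __name__ == "__main__":
    print(f"transmitted fraction: {narrow_beam_transmission(path, 0.5):.3f}")
```

This only models the narrow-beam case. In a broad-beam geometry, scattered photons reaching the detector raise the measured fraction and lower the effective attenuation coefficient.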

Fig. 2.4. : Schematic illustration of the concept of narrow-beam and broad-beam attenuation for a simple single detector system


In the broad-beam case (top), the source is uncollimated, and some photons are scattered into the acceptance angle of the collimated detector. Because scattered events are accepted, the count rate is higher in the broad-beam case, which lowers the effective linear attenuation coefficient. In the narrow-beam case (bottom), most scattered photons are not detected, mainly because both the source and the detector are collimated. The difference between the counts detected with and without the object in place has two causes: total attenuation within the object, and scattering out of the line of sight of the detector.

2.1.4 Types of coincidence events

Coincidence events in PET fall into four categories: trues, scattered, randoms and multiples. Fig. 2.5. illustrates the detection of the first three of these types of coincidence.

Fig. 2.5. : Types of detected coincidence events in PET


True coincidences occur when both photons from an annihilation event are registered by detectors in coincidence, neither photon undergoes any form of interaction prior to detection, and no other event is detected within the coincidence time-window.

A scattered coincidence is one in which at least one of the detected photons has undergone at least one Compton scattering event prior to detection. Since Compton scattering changes the direction of the photon, the resulting coincidence event is highly likely to be assigned to the wrong LOR. Scattered coincidences add a background to the true coincidence distribution. This background changes slowly with position, decreases contrast and causes isotope concentrations to be overestimated. Scattered coincidences also add statistical noise to the signal. The number of scattered events depends on the volume and attenuation characteristics of the object being imaged, and on the geometry of the tomograph. Single-scattered events are those in which one or both annihilation photons undergo one scattering. Multiple-scattered events are those in which at least one of the two photons undergoes two or more scatterings.

Random (or false) coincidences occur when two uncorrelated photons, not arising from the same annihilation event, are incident on the detectors within the coincidence time window of the system. The number of random coincidences in a given LOR is closely related to the rate of single events measured by the detectors joined by that LOR. The rate of random coincidences increases roughly with the square of the activity in the FOV. As with scattered events, the number of random coincidences detected also depends on the volume and attenuation characteristics of the object being imaged, and on the geometry of the tomograph. The distribution of random coincidences is fairly uniform across the FOV, and will cause isotope concentrations to be overestimated if not corrected for. Random coincidences also add statistical noise to the data.

A simple expression relating the number of random coincidences assigned to an LOR to the number of single events incident on the relevant detectors can be derived as follows. Let $\tau$ be the coincidence resolving time of the system, such that any events detected with a time difference of less than $\tau$ are considered coincident. Let $r_1$ be the singles rate on detector channel 1. Then, in one second, the total time window during which coincidences will be recorded is $2\tau r_1$. If the singles rate on detector channel 2 is $r_2$, the number of random coincidences $R_{12}$ assigned to the LOR joining detectors 1 and 2 is given by:

$$R_{12} = 2\tau\, r_1 r_2 \qquad (2.11)$$

This relation holds under two conditions. First, the singles rate must be much larger than the rate of coincidence events. Second, the singles rates must be small compared with the reciprocal of the coincidence resolving time $\tau$, so that dead-time effects can be ignored.
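Eq. (2.11) is simple enough to check directly. The sketch below uses hypothetical values: $\tau$ = 6 ns and 100 kcps singles on each channel. Both are chosen only so that $r\tau \ll 1$, as the validity condition above requires. It also shows the quadratic dependence on activity: doubling the activity doubles both singles rates.

```python
def random_coincidence_rate(tau_s: float, r1_cps: float, r2_cps: float) -> float:
    """Randoms rate R12 = 2 * tau * r1 * r2 on the LOR joining detectors 1 and 2, Eq. (2.11).

    Only meaningful when r1*tau and r2*tau are much smaller than 1 (negligible dead time).
    """
    return 2.0 * tau_s * r1_cps * r2_cps


# Hypothetical example: tau = 6 ns and 100 kcps singles on each detector channel.
tau = 6e-9

if __name__ == "__main__":
    print(random_coincidence_rate(tau, 1e5, 1e5))  # randoms per second on this LOR
    # Doubling the activity doubles both singles rates and quadruples the randoms rate.
    print(random_coincidence_rate(tau, 2e5, 2e5) / random_coincidence_rate(tau, 1e5, 1e5))
```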

Multiple coincidences occur when more than two photons are detected in different detectors within the coincidence resolving time. In this situation, it is not possible to determine the LOR to which the event should be assigned, and the event is rejected. Multiple events can also cause event mis-positioning.

2.2 Design configurations of PET tomographs

There has been a significant evolution in PET instrumentation. Early systems used a single ring of bismuth germanate (BGO) detectors with a spatial resolution of 15 mm (Keller and Lupton 1983). Later systems use multiple rings of small BGO crystals, with a spatial resolution of about 5-6 mm (Phelps and Cherry 1998). Improvements in spatial resolution have been achieved through smaller crystals, efficient use of photomultiplier tubes (PMTs) and Anger-logic-based position readout.

The most successful tomograph designs represent a reasonable compromise between maximising system sensitivity and keeping detector dead-time and corruption from scattered and random coincidences at a reasonable level (Bailey et al 1997a). In particular, this performance has been achieved through the development of multi-ring tomographs equipped with collimators (or septa) between the detector rings. In these systems, coincidences are allowed only within a ring or between adjacent rings. The consequence is a considerable decrease in system sensitivity and a less-than-optimal use of electronic collimation, one of the main advantages of coincidence detection. Removing the septa and accepting coincidences between detectors in any two rings of the tomograph has increased sensitivity by a factor of 4-5 (Dahlbom et al 1989). Septaless tomographs can also be operated more effectively with lower activity levels in the field-of-view. Fig. 2.6 illustrates possible geometric designs of positron imaging systems.

Today's cost- and performance-conscious health care environment creates two opposing pressures. One is to minimise the cost of PET tomographs by reducing the geometry from a full ring to a dual-headed device. The other is to provide the most accurate diagnostic answers through the highest possible performance. The dilemma is that both approaches can lower the cost of health care. Integrating recent research findings into the design of both PET scanner geometries has become the goal of both the academic community and the nuclear medicine industry. The most important outcome of research in this field is improved cost/performance optimisation of clinical PET systems.

Dedicated full-ring PET tomographs have evolved through at least 4 generations since the design of the first PET scanner in the mid 1970s (Hoffman et al 1976) and are still considered the high-end devices. The better performance of full-ring systems compared to dual-headed systems is due to higher overall system efficiency and count rate capability which provides the statistical realisation of the physical detector resolution and not a higher intrinsic physical detector resolution. Obviously, this has some important design consequences since even if both scanner designs provide the same physical spatial resolution as estimated by a point spread function, the full-ring system will produce higher resolution images in patients as a result of the higher statistics per unit imaging time.

Fig. 2.6. : Illustration of the range of different geometries of positron volume imaging systems


The dual-head coincidence camera and partial ring tomographs require the rotation of the detectors to collect a full 180° set of projection data.

Table 2.3. : Design specifications of septa-retractable and fully 3D only multi-ring PET tomographs used in our research

| | PRT-1 | ECAT 953B | ECAT ART | GE-ADVANCE |
|---|---|---|---|---|
| Detector size (mm) | 5.6 × 6.1 × 30 | 5.6 × 6.1 × 30 | 6.75 × 6.75 × 20 | 4.0 × 8.1 × 30 |
| No. of crystals | 2048 | 6144 | 4224 | 12096 |
| No. of rings | 16 | 16 | 24 | 18 |
| Ring diameter (mm) | 760 | 760 | 824 | 927 |
| Axial FOV (mm) | 104 | 104 | 162 | 152 |
| Max. axial angle | 7.5° | 7.5° | 11.3° | 8.8° |

A modern multi-ring tomograph with septa in place detects only about 0.5% of the annihilation photons emitted from the activity within the tomograph field-of-view. This increases to over 3% when the septa are retracted (Phelps and Cherry 1998). However, even if the detector system were 100% efficient for the detection of annihilation photons, the angular acceptance of modern scanners would allow only 4-5% of the total coincidences to be recorded. The spatial resolution obtained with modern tomographs is currently limited to 5-6 mm in all three directions. The design characteristics of some septa-retractable and fully 3D-only PET tomographs used in our research within the PARAPET project are summarised in Table 2.3.

About 60% of the cost of a BGO-based full-ring PET tomograph lies in the detectors and the associated PMTs and electronic boards. To lower the cost of dedicated full-ring systems, Townsend et al (1993) therefore developed a partial-ring PET tomograph. Partial-ring systems are thus thought to provide a cost/performance compromise. Up to now, the only commercially available partial-ring dedicated PET system is the ECAT ART, manufactured by CTI PET systems (Knoxville, TN) (Bailey et al 1997b). Fig. 2.7. illustrates phantom data acquisition with this tomograph. This fully 3D-only device costs about 50% as much as the equivalent full-ring system, the ECAT EXACT, and has about one third of its efficiency. The detector blocks rotate continuously at about 30 rpm to collect the angular data required by the reconstruction algorithm. Slip-ring technology is used to achieve continuous rotation and data collection. This device has higher efficiency than current-generation full-ring PET tomographs operated in 2D mode. However, it has a higher scatter fraction and some count rate limitations because of the slow decay time of BGO.

Fig. 2.7. : PET scanning using a commercial partial-ring tomograph, the ECAT ART and the three-dimensional Hoffman brain phantom 1


The two opposing arrays of detectors (not shown) span about one third of the circumference of an equivalent full-ring tomograph. They rotate continuously to acquire coincidence events at all azimuthal angles, as opposed to the step-and-shoot rotation of the PRT-1 tomograph.

Another design configuration for fully 3D-only PET scanners is the hexagonal array, in which each side of the hexagon is a rectangular sodium iodide (NaI(Tl)) scintillation camera. This system, known as the PENN-PET, provides good efficiency and image quality but is limited by count rate. It was originally developed by Karp et al (1990). It is manufactured by UGM Medical Systems Inc. and commercially available from General Electric (Waukesha, WI) as the QUEST. Because the NaI(Tl) scintillation camera design is cheaper, this device costs about 60% as much as a full-ring BGO system.

Dual-purpose or hybrid dual-head NaI(Tl) scintillation cameras can image single-photon emitting radiopharmaceuticals (with collimators). By removing the collimators and adding coincidence circuitry, they can also image positron-emitting radiopharmaceuticals in coincidence mode. One main motivation behind such systems is to achieve good image quality without a significant increase in the cost of existing dual-head gamma cameras. Another is to use a single imaging device for both SPECT and PET. An important design consideration when optimising such systems is that the performance achieved for the low-energy photons used in SPECT imaging (100 to 200 keV) can hardly be attained for 511 keV annihilation photons. One reason is the lower stopping power of NaI(Tl) at this energy. The other is its slow decay time, which has severe consequences for coincidence detection in the presence of a high singles count rate.

2.3 Scintillation detectors for PET

The critical component of PET tomographs is the scintillation detector. The scintillation process converts photon energy into visible light through interaction with a scintillating material. Photoelectric absorption and Compton scatter generate electrons with differing energy distributions. When scintillation detectors are exposed to a mono-energetic photon beam, the energy measured is not that of the electron generated by the initial interaction. It is the total energy deposited by the photon in the detector. In small detectors, photons may escape after depositing only part of their energy in the crystal. In practice, the energy distribution is also blurred by the finite energy resolution of the detection system. The energy resolution (in percent) of the system is defined as the ratio of the full-width at half-maximum (FWHM) of the full-energy peak to the energy at the full-energy peak.
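The energy-resolution definition above can be sketched as follows. The code takes a sampled spectrum, assumes the spectrum maximum is the full-energy peak, and finds the FWHM by linear interpolation at half maximum. Real spectra also contain a Compton continuum and may need peak fitting. The Gaussian test spectrum with 15% FWHM at 511 keV is hypothetical, and the function name is illustrative.

```python
import math


def energy_resolution_percent(energies_kev, counts):
    """Energy resolution = FWHM of the full-energy peak / peak energy * 100.

    Assumes the spectrum maximum is the full-energy peak and that the peak
    drops below half maximum on both sides within the sampled range.
    """
    i_peak = max(range(len(counts)), key=lambda i: counts[i])
    half = counts[i_peak] / 2.0

    # Walk outwards from the peak to the first samples below half maximum.
    lo = i_peak
    while lo > 0 and counts[lo] >= half:
        lo -= 1
    hi = i_peak
    while hi < len(counts) - 1 and counts[hi] >= half:
        hi += 1
    if counts[lo] >= half or counts[hi] >= half:
        raise ValueError("peak is not fully contained in the spectrum")

    def crossing(i, j):
        # Energy at which the counts cross `half` between samples i and j (linear interpolation).
        return energies_kev[i] + (half - counts[i]) * (energies_kev[j] - energies_kev[i]) / (counts[j] - counts[i])

    fwhm = crossing(hi - 1, hi) - crossing(lo, lo + 1)
    return 100.0 * fwhm / energies_kev[i_peak]


# Hypothetical Gaussian photopeak at 511 keV with 15% FWHM, sampled in 1 keV bins.
sigma = 0.15 * 511.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))
energies = [300.0 + k for k in range(421)]  # 300-720 keV
counts = [1000.0 * math.exp(-0.5 * ((e - 511.0) / sigma) ** 2) for e in energies]

if __name__ == "__main__":
    print(f"{energy_resolution_percent(energies, counts):.1f} %")
```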

Table 2.4. : Characteristics of scintillation crystals under development and currently used in nuclear medicine imaging systems

| Scintillator | NaI(Tl) | BGO | BaF₂ | LSO | GSO | LuAP | YAP |
|---|---|---|---|---|---|---|---|
| Formula | NaI(Tl) | Bi₄Ge₃O₁₂ | BaF₂ | Lu₂SiO₅:Ce | Gd₂SiO₅:Ce | LuAlO₃:Ce | YAlO₃:Ce |
| Density (g/cm³) | 3.67 | 7.13 | 4.89 | 7.4 | 6.71 | 8.34 | 5.37 |
| Light yield (%) | 100 | 15-20 | 3/20 | 75 | 20-25 | 25-50 | 40 |
| Effective Z | 51 | 75 | 53 | 66 | 60 | 65 | 34 |
| Decay constant (ns) | 230 | 300 | 1/700 | 42 | 30-60 | 18 | 25 |
| Peak wavelength (nm) | 410 | 480 | 195/220 | 420 | 440 | 370 | 370 |
| Index of refraction | 1.85 | 2.15 | 1.56 | 1.82 | 1.95 | 1.95 | 1.56 |
| Photofraction (%) (*)/(+) | 17.3/7.7 | 41.5/88 | 18.7/78.6 | 32.5/85.9 | 25/82.3 | 30.6/85.1 | 4.5/48.3 |
| Mean free path (cm) (*)/(+) | 2.93/0.38 | 1.04/0.08 | 2.19/0.27 | 1.15/0.1 | 1.4/0.16 | 1.05/0.1 | 2.17/0.67 |
| Hygroscopic | yes | no | no | no | no | no | no |

(*) at 511 keV; (+) at 140 keV

The first nuclear medicine imaging systems (single-photon and positron) were designed with NaI(Tl) scintillation detectors. All commercial imaging devices available today employ scintillation detectors coupled to PMTs to convert the light output into electrical signals. In the near future, photodiodes or avalanche photodiodes will likely also be used for this purpose. The desired characteristics of scintillation crystals are increased light output per gamma-ray interaction, faster rise and decay times, greater stopping power and improved energy resolution. Table 2.4 summarises these properties for selected scintillators under development and currently in use. Improvements in these characteristics enable detectors to be divided into smaller elements, thus increasing resolution and minimising dead-time losses.
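Stopping power can be made concrete with the 511 keV mean free paths of Table 2.4. The sketch below computes the probability that a photon at normal incidence interacts within a 3 cm crystal, the 30 mm depth listed for several scanners in Table 2.3. It also computes the probability that both annihilation photons interact. It is a simplified model that ignores photofraction, energy windows, oblique incidence and inter-crystal scatter.

```python
import math

# Mean free paths at 511 keV (cm), taken from Table 2.4.
MFP_511_CM = {
    "NaI(Tl)": 2.93, "BGO": 1.04, "BaF2": 2.19, "LSO": 1.15,
    "GSO": 1.4, "LuAP": 1.05, "YAP": 2.17,
}


def interaction_probability(depth_cm: float, mfp_cm: float) -> float:
    """Probability that a photon at normal incidence interacts within depth_cm."""
    return 1.0 - math.exp(-depth_cm / mfp_cm)


DEPTH_CM = 3.0  # e.g. the 30 mm crystal depth listed in Table 2.3

if __name__ == "__main__":
    for name, mfp in MFP_511_CM.items():
        p = interaction_probability(DEPTH_CM, mfp)
        # Both annihilation photons must interact for a coincidence to be recorded.
        print(f"{name:8s} single photon: {p:.2f}   photon pair: {p * p:.2f}")
```

Squaring the single-photon probability shows why stopping power matters more in coincidence detection than in single-photon imaging.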

NaI(Tl) combines a moderately high density and effective atomic number. This gives it excellent stopping power and photofraction for 140 keV photons, but only modest values at 511 keV. BGO, on the other hand, has a very high density and effective atomic number, and is not hygroscopic. These properties made it the preferred scintillator for positron imaging devices. Its major disadvantages, however, are its low light yield and its only moderately fast decay time, which limit coincidence timing and count rate performance. Subsequent developments mainly aimed to reduce the detector size in order to attain better resolution. The high cost of PMTs favoured the introduction of the block detector concept (Casey and Nutt 1986), which characterised the third generation of PET scanners. A crystal block is cut into small detector cells (typically an 8 × 8 array of elements about 6 mm × 6 mm each) and read out by four PMTs (Fig. 2.8). Light-sharing schemes between the four PMTs (known as Anger logic) identify the crystal of interaction: the position coordinates X and Y are calculated from the outputs A, B, C and D of the four PMTs. This approach reduces the number of PMTs by a factor of up to 16. The cost is increased detector dead-time, because each readout channel carries a much higher count load. The positioning algorithm is subject to statistical error, whose magnitude depends on the number of scintillation photons detected by the PMTs. The cuts applied to the detector block cause scintillation light losses. As a result, the energy resolution at 511 keV of such BGO blocks degrades from an intrinsic value of about 15% FWHM to between about 23% and 44%, depending on the cell (Vozza et al 1997). The detector blocks are placed side by side to form a circular array.

The block is optically coupled to four photomultiplier tubes at the back, and the crystal in which photoabsorption occurs is identified by comparing the outputs of the four photomultiplier tubes (Anger logic).
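The text does not give the Anger-logic formulae. The sketch below uses the common light-sharing ratios for a 2 × 2 PMT layout, with an assumed labelling of A top-left, B top-right, C bottom-left and D bottom-right. The crystal lookup is a hypothetical uniform binning for illustration only. In practice the (X, Y) response of a block is non-linear, and crystals are identified with a lookup table derived from a flood image. The summed signal A+B+C+D serves as the energy estimate.

```python
def anger_position(a: float, b: float, c: float, d: float):
    """Light-sharing (Anger logic) estimate for a block read out by four PMTs.

    Assumed PMT layout (hypothetical labelling):  A B
                                                  C D
    Returns (x, y, e) with x, y in [-1, 1] and e the summed signal.
    """
    e = a + b + c + d
    if e <= 0:
        raise ValueError("no signal")
    x = ((b + d) - (a + c)) / e  # right minus left
    y = ((a + b) - (c + d)) / e  # top minus bottom
    return x, y, e


def crystal_from_position(x: float, y: float, n: int = 8):
    """Naive mapping of (x, y) onto an n x n crystal array by uniform binning.

    Hypothetical: real systems use a crystal lookup table from a flood image.
    Returns (row, col), with row 0 at the top and col 0 on the left.
    """
    col = min(n - 1, max(0, int((x + 1.0) / 2.0 * n)))
    row = min(n - 1, max(0, int((1.0 - y) / 2.0 * n)))
    return row, col


if __name__ == "__main__":
    x, y, e = anger_position(40.0, 25.0, 20.0, 15.0)
    print(x, y, e, crystal_from_position(x, y))
```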

Among new or improved scintillating crystals, the most promising materials appear to be cerium-doped crystals such as LSO:Ce, GSO:Ce, YAP:Ce and LuAP:Ce. These can be grown with good quality and efficient scintillation properties. It is important to point out, however, that the growth of large, good-quality LuAP:Ce crystals has not yet been reported in the literature. Moreover, a re-absorption process seems to influence its scintillation properties. CTI PET systems is developing a new PET tomograph based on LSO, which offers significant improvements in coincidence timing and count rate over BGO. These improved characteristics of LSO offer tremendous advantages for fully 3D data acquisition and reconstruction designs of positron imaging systems. The same company is also developing a lower-energy detector material, yttrium oxyorthosilicate (YSO), that may be useful for single-photon imaging. Other potential crystals include fast, high effective-Z Ce³⁺-doped mixed orthoaluminate Luₓ(RE³⁺)₁₋ₓAP:Ce scintillators (chemical formula Luₓ(RE³⁺)₁₋ₓAlO₃:Ce) for RE³⁺ = Y³⁺ or Gd³⁺ ions. These have a higher cerium content than previously studied crystals (Chval et al 2000). Among all the crystals studied in this research, Lu₀.₃Y₀.₇AP:Ce seems to be the most promising with respect to energy resolution, scintillation decay constant and light yield. Moreover, its thermally stimulated luminescence is very low. Nevertheless, LuₓY₁₋ₓAP:Ce compounds with x ≤ 30% cannot really compete with LSO:Ce, or possibly even with pure LuAP:Ce, in terms of stopping power. LuₓGd₁₋ₓAP:Ce crystals with x ≥ 60% seem more interesting in this respect. Improving the stopping power of the mixed orthoaluminate crystals requires a significant increase in their lutetium content.

2.4 2D and 3D PET data acquisition

Most state-of-the-art full-ring PET scanners can be operated in both 2D and 3D data collection modes. In 2D mode, PET scanners have septa between detector planes to limit the detection of scattered photons, improve count rate performance and reduce random coincidence events. Fig. 2.9. illustrates the conceptual difference between conventional 2D acquisition and volume data acquisition. In 2D PET, data acquisition is limited to coincidences detected within the same detector ring or between adjacent detector rings. Each 2D transverse section of the tracer distribution is reconstructed independently of adjacent sections. Information from detectors in the same ring yields images of the tracer distribution in the planes of the detector rings (direct planes). Information from detectors in adjacent rings yields images of the tracer distribution in the planes between the detector rings (cross planes). Hence, a multi-ring PET scanner consisting of N detector rings gives 2N-1 images representing the tracer distribution in adjacent cross-sections of a patient. These transverse sections are then stacked together so that the spatial distribution of the radiopharmaceutical can be viewed in 3D.

However, this prevents full use of electronic collimation, which allows coincidence events to be recorded within a cone-beam geometry rather than just the fan-beam geometry of 2D mode. The advantage of 3D mode is an increase in coincidence efficiency by about a factor of five. This is accomplished by removing the inter-plane septa, at the expense of increased scattered radiation. The septa themselves subtend an appreciable solid angle in the camera FOV. As a result, they shadow the detectors significantly, by as much as 50%. 3D mode also limits count rate performance at high activities because of the relatively slow decay time of BGO. In fully 3D PET, data acquisition is no longer limited to coincidences detected within each detector ring. Oblique LORs formed from coincidences detected between different detector rings are also used to reconstruct the image, although scanner manufacturers adopt different ways to bin or compress the data. The increase in the number of LORs depends on the number of crystal rings and the degree of rebinning used in 2D mode. Rebinning in 2D mode results in a variation in sensitivity along the axial FOV. In 3D mode, there is a much stronger variation in sensitivity (Fig. 2.10), which peaks in the centre of the axial FOV.

In fully 3D PET, the data are sorted into sets of LORs. Each set is parallel to a particular direction and is therefore a 2D parallel projection of the 3D tracer distribution. The coincidences collected for each LOR are stored in 2D arrays, or sinograms, $S_{ij}(s,\phi)$. In a given sinogram, each row corresponds to LORs for a particular azimuthal angle $\phi$. Each such row corresponds to a 1D parallel projection of the tracer distribution at a different coordinate along the scanner axis. The columns correspond to the radial coordinate $s$, that is, the distance from the scanner axis. Sinograms take their name from the sinusoidal curve described by the set of LORs through an off-centre point within the field-of-view. Some scanner models (e.g. ECAT EXACT3D) allow other acquisition modes. In list-mode, events are stored individually and chronologically. In projection-mode, events are histogrammed directly into 2D parallel projections.
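The origin of the name "sinogram" can be illustrated with a small sketch. It bins the LORs through a single point (x, y) into a sinogram. It assumes the convention $s = x\cos\phi + y\sin\phi$ with $\phi \in [0, \pi)$, and uses hypothetical numbers of angles and radial bins. A centred point fills the middle column. An off-centre point traces a sinusoid whose amplitude is its distance from the axis.

```python
import math


def point_source_sinogram(x: float, y: float, n_angles: int = 8, n_bins: int = 9, bin_mm: float = 1.0):
    """Bin the LORs through a point (x, y) into a sinogram S(s, phi).

    Row k corresponds to the azimuthal angle phi_k = pi * k / n_angles. The LOR
    through the point at that angle has radial coordinate s = x cos(phi) + y sin(phi)
    (assumed sign convention). Column n_bins // 2 corresponds to s = 0.
    """
    sino = [[0] * n_bins for _ in range(n_angles)]
    centre = n_bins // 2
    for k in range(n_angles):
        phi = math.pi * k / n_angles
        s = x * math.cos(phi) + y * math.sin(phi)
        b = centre + round(s / bin_mm)
        if 0 <= b < n_bins:
            sino[k][b] += 1
    return sino


if __name__ == "__main__":
    # An off-centre point at (3, 0) mm traces half a sine period across the rows.
    for row in point_source_sinogram(3.0, 0.0):
        print("".join("#" if v else "." for v in row))
```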


Fig. 2.9. : Diagram (not to scale) of the axial section of a 16-ring cylindrical PET tomograph operated in 2D mode (top) and 3D mode (bottom)


In the 2D acquisition mode, the rings are separated by annular shielding (septa) and only in-plane and cross-plane LORs are allowed. In the 3D acquisition mode, the septa are retracted and coincidences are allowed between any two rings.

Fig. 2.10. : Simulated relative sensitivity from the number of LORs in 2D and 3D acquisitions as a function of the plane number for a 16-ring PET tomograph


The maximum ring difference is ±3 in 2D mode and ±15 in 3D mode. As can be seen, at the edge of the axial FOV, 2D and 3D mode acquisitions have the same predicted sensitivity but in the centre of the FOV, the sensitivity in 3D mode is significantly higher.
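A simple way to see the behaviour described for Fig. 2.10 is to count, for each plane, the ring pairs (i, j) allowed by the maximum ring difference. The sketch assumes each ring pair contributes equally to plane i + j. It ignores obliquity, septal shadowing and detector efficiency, so it gives only the qualitative shape: equal sensitivity at the edges of the axial FOV, much higher in the centre for 3D mode.

```python
def lors_per_plane(n_rings: int, max_ring_diff: int):
    """Number of ring pairs (i, j) with |i - j| <= max_ring_diff contributing to each plane.

    Plane index p = i + j runs over 2*n_rings - 1 planes: even p lie at the
    direct-plane positions, odd p at the cross-plane positions. Every ring pair is
    weighted equally (simplifying assumption).
    """
    counts = [0] * (2 * n_rings - 1)
    for i in range(n_rings):
        for j in range(n_rings):
            if abs(i - j) <= max_ring_diff:
                counts[i + j] += 1
    return counts


two_d = lors_per_plane(16, 3)     # maximum ring difference +/-3 (2D mode)
three_d = lors_per_plane(16, 15)  # maximum ring difference +/-15 (3D mode)

if __name__ == "__main__":
    for p, (c2, c3) in enumerate(zip(two_d, three_d)):
        print(f"plane {p:2d}: 2D {c2:2d}  3D {c3:2d}")
```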

Fig. 2.11 shows the sampling pattern in a sinogram of a single detector ring, and how sets of parallel LORs map to locations on the sinogram. The sampling in the transverse direction turns out to be a fan-beam pattern, with equal angles of $\Delta d_t/2R_d$ between the fan LORs. Here $R_d$ is the scanner radius, $\Delta d_t = 2\pi R_d/N_d$ is the centre-to-centre spacing of the detector cells in the transverse direction, and $N_d$ is the total number of detectors per ring. However, most reconstruction algorithms treat the data as a parallel-beam pattern, as illustrated in Fig. 2.11(a), where the ray spacing can be calculated from the following expression:


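$$\Delta x_r(k) = R_d\left[\sin\left(\frac{(k+1)\,\Delta d_t}{R_d}\right) - \sin\left(\frac{k\,\Delta d_t}{R_d}\right)\right] \qquad (2.12)$$

where $k$ is the index of the ray counted from the centre of the projection.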

As a consequence, the ray spacing decreases with increasing transverse distance from the centre. When the transverse distance from the centre is much smaller than $R_d$, the assumption of uniform spacing ($\Delta x_r = \Delta d_t$) is a reasonable approximation. Otherwise, the data must be interpolated to uniform spacing along the radial direction. This effect is negligible when the diameter of the FOV is much smaller than the diameter of the detector ring (generally about half as large). Correction for this effect is generally integrated in the acquisition software and is referred to as arc correction.
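The sketch below illustrates arc correction. It computes the non-uniform radial positions $x_r(k) = R_d \sin(k\,\Delta d_t/R_d)$ of the rays in one parallel projection, whose successive differences are the spacings of Eq. (2.12). It then linearly interpolates a sinogram row onto a uniform grid of spacing $\Delta d_t$. Linear interpolation is an assumed choice; scanner software may use another scheme. The example geometry ($R_d$ = 380 mm, 384 detectors per ring, 64 rays) is derived from the ECAT 953B entries of Table 2.3 (760 mm diameter, 6144 crystals in 16 rings) and is for illustration only.

```python
import math
from bisect import bisect_right


def ray_positions(n_rays: int, ring_radius_mm: float, n_detectors: int):
    """Radial positions x_r(k) = R_d sin(k * dd_t / R_d) of the rays in one parallel projection.

    k is counted from the centre of the projection and dd_t = 2*pi*R_d/N_d is the
    detector pitch. Positions are strictly increasing only while |k| * dd_t / R_d < pi/2.
    Returns (positions, pitch).
    """
    pitch = 2.0 * math.pi * ring_radius_mm / n_detectors
    half = n_rays // 2
    xs = [ring_radius_mm * math.sin((k - half) * pitch / ring_radius_mm) for k in range(n_rays)]
    return xs, pitch


def arc_correct(values, positions, pitch):
    """Linearly interpolate a sinogram row from non-uniform positions onto a uniform grid.

    Uniform samples falling outside the measured range are set to zero.
    """
    half = len(values) // 2
    out = []
    for k in range(len(values)):
        x = (k - half) * pitch
        if x < positions[0] or x >= positions[-1]:
            out.append(0.0)
            continue
        m = bisect_right(positions, x)
        x0, x1 = positions[m - 1], positions[m]
        t = (x - x0) / (x1 - x0)
        out.append((1.0 - t) * values[m - 1] + t * values[m])
    return out


if __name__ == "__main__":
    xs, pitch = ray_positions(64, 380.0, 384)
    print(f"pitch {pitch:.2f} mm, centre spacing {xs[33] - xs[32]:.2f} mm, "
          f"edge spacing {xs[63] - xs[62]:.2f} mm")
```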


As can be seen from Fig. 2.11(b), adjacent sinogram rows, corresponding to adjacent parallel projections, are shifted by half the detector spacing relative to each other. The resulting interlaced sinogram sampling scheme is shown in Fig. 2.11(c). It recovers a given maximum spatial frequency with only half as many sampling points as a rectangular grid, and is optimal in two dimensions (Natterer 1986). Nevertheless, the data are rebinned onto a rectangular grid, as shown in Fig. 2.11(d), to satisfy the requirements of common reconstruction algorithms. With all these approximations, the two-dimensional parallel projections are extracted from the sinograms $S_{ij}(s,\phi)$ as follows. The rows that share the same row index (i.e. the same angle $\phi$) and the same ring index difference $d = i - j$ are stacked in ascending order of $i$.

Fig. 2.11. : (a) Cross-section of a multi-ring cylindrical tomograph showing the transverse sampling pattern and the approximately equi-spaced parallel beam pattern often used in practice; (b) Two sets of parallel LORs are shown which yield two rows in the sinogram (c)


The solid lines show the parallel projections for the first angle, the dashed lines for the second angle. Adjacent projections sample the centre of the FOV (s=0) and the corresponding sinogram rows are shifted by half the detector spacing; (d) to satisfy reconstruction requirements, the interlaced sinogram is rebinned into a rectangular grid

2.5 Towards quantitative 3D PET

The physical performance characteristics of PET scanners can be quantified by well-known parameters such as uniformity, sensitivity, in-plane and axial spatial resolution, count rate capability and dead-time, and scatter fraction. There is no universal agreement on a protocol for measuring these parameters. The most widely recognised is the set of tests proposed by the National Electrical Manufacturers Association (NEMA) (Karp et al 1991). Simple phantoms such as line sources and 20 cm diameter uniform cylinders can be used for this purpose. These parameters reflect the actual performance of the tomograph and can serve as a reference for comparing different tomograph designs. It goes without saying that comparing a single parameter may not be relevant for assessing overall scanner performance. Moreover, different trues and randoms rates, and different levels of scatter in 2D and 3D, make it difficult to assess the real advantages of 3D vs 2D imaging. To assess these advantages more realistically, Strother et al (1990) proposed the concept of noise equivalent counts (NEC), defined as:

$$\mathrm{NEC} = \frac{\left[T\,(1-SF)\right]^{2}}{T + 2fR} \qquad (2.13)$$

where T and R are the total (i.e. trues + scatter) and randoms rates, SF is the scatter fraction and f is the fraction of the maximum transaxial FOV subtended by a centred 24 cm diameter field. The factor of two in the denominator results from randoms subtraction using data collected in a delayed coincidence window. NEC can be related to the global signal-to-noise ratio and has been used extensively to assess the real improvement due to 3D data collection. The principal merit of the NEC concept is that it measures the useful counts being acquired after applying perfect correction techniques for the physical effects. It turns out that doubling the system resolution requires a 16-fold increase in the number of noise equivalent counts for a low-statistics study.
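Eq. (2.13) translates directly into code. The sketch below is a minimal illustration using the symbols defined above; the function name and the example rates are hypothetical.

```python
def noise_equivalent_counts(total, randoms, scatter_fraction, f):
    """NEC = [T (1 - SF)]^2 / (T + 2 f R), as in Eq. (2.13).

    total            -- T, prompt rate excluding randoms (trues + scatter)
    randoms          -- R, randoms rate (subtracted using a delayed window)
    scatter_fraction -- SF = scatter / (trues + scatter)
    f                -- fraction of the maximum transaxial FOV subtended
                        by a centred 24 cm diameter field
    """
    if not 0.0 <= scatter_fraction < 1.0:
        raise ValueError("scatter fraction must lie in [0, 1)")
    trues = total * (1.0 - scatter_fraction)
    # The factor of two accounts for the noise added by delayed-window subtraction.
    return trues ** 2 / (total + 2.0 * f * randoms)
```

With no scatter and no randoms the NEC reduces to the true coincidence rate. With hypothetical rates T = 100 kcps, R = 50 kcps, SF = 0.35 and f = 0.5, the NEC is 65²/150 ≈ 28.2 kcps, well below the 65 kcps of true coincidences.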

Fig. 2.12. : Noise equivalent count rate (NEC) for the EXACT HR+ multi-ring PET tomograph with retractable septa

The measurements have been obtained with a 20 cm diameter uniform cylinder (Bendriem and Townsend 1998)

Fig. 2.12 shows the NEC measured with a 20 cm diameter uniform cylinder in the EXACT HR+ with septa extended and retracted. As expected from the behaviour of the true coincidence rate, the NEC in 3D peaks at a lower activity concentration than in 2D. Note that the peak NEC is greater in 3D than in 2D for this particular tomograph (Bendriem and Townsend 1998). An important consequence of operating in 3D mode is that the signal-to-noise ratio is higher in the low activity concentration range. This has interesting implications for clinical studies where reducing the injected activity, and hence the radiation dose, is of interest.

PET offers the possibility of quantitative measurements of tracer concentration in vivo. However, several issues must be considered to fully realise this potential. In practice, the measured line integrals must be corrected for a number of background and physical effects before reconstruction. These include dead-time correction, detector normalisation, subtraction of random coincidences, and attenuation and scatter corrections. The latter two effects are more relevant to the work presented in this thesis and are therefore briefly discussed below.

Attenuation correction in PET is now widely accepted by the nuclear medicine community as a vital component for the production of artefact-free, quantitative data. The most accurate attenuation correction techniques are based on measured transmission data acquired before (pre-injection), during (simultaneous), or after (post-injection) the emission scan. Alternative methods to compensate for photon attenuation in reconstructed images use an assumed distribution and boundary of attenuation coefficients, segmented transmission images, or consistency conditions.

Fig. 2.13. : Illustration of the main differences between attenuation correction schemes for SPECT and PET on a transmission image of a patient in the thorax region (left)

In SPECT, the most widely used algorithm on commercial systems calculates the attenuation factor exp(-μa) for all projection angles and assigns an average attenuation factor to each point within the object along all rays. The procedure can be repeated iteratively and adapted to non-uniform attenuating media. In PET, the attenuation correction factors are independent of the location of the emission point on the LOR and are therefore determined directly by the attenuation factor exp(-μ(a+b)) for each projection. The corresponding attenuation-corrected emission image is also shown (right).
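The SPECT scheme in this caption, usually attributed to Chang, can be sketched for the simplest case of a uniform circular attenuator. The sketch below is first-order only (no iterations) and assumes a known μ and radius; the function name, the number of angles and the example value μ = 0.15 cm⁻¹ (roughly water at 140 keV) are assumptions for illustration.

```python
import math


def chang_correction(x, y, mu, radius, n_angles=64):
    """First-order Chang correction factor for a point (x, y) inside a uniform
    circular attenuator of linear attenuation coefficient mu (cm^-1) and the
    given radius (cm). Returns 1 / mean(exp(-mu * d)) over all directions."""
    r2 = x * x + y * y
    if r2 >= radius * radius:
        raise ValueError("point must lie inside the attenuator")
    total = 0.0
    for k in range(n_angles):
        theta = 2.0 * math.pi * k / n_angles
        ux, uy = math.cos(theta), math.sin(theta)
        # Distance d from (x, y) to the boundary along (ux, uy):
        # solve |p + d u|^2 = R^2 for the positive root.
        b = x * ux + y * uy
        d = -b + math.sqrt(b * b - (r2 - radius * radius))
        total += math.exp(-mu * d)
    mean_transmission = total / n_angles
    return 1.0 / mean_transmission
```

At the centre every path has length R, so the factor is exactly exp(μR); off-centre points receive a smaller factor because short paths dominate the average. Iterative refinement and non-uniform media, mentioned above, are not covered by this sketch.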

Transmission-based attenuation correction has traditionally been performed in PET, whereas it came to SPECT only more recently. There are many possible explanations for this, one being that PET started mainly as a research tool, with greater emphasis on accurate quantitative measurements. Fig. 2.13 shows a transmission image (left) and the corresponding reconstructed emission image (right), along with the attenuation paths for both single-photon and coincidence detection modes. In PET, correction for attenuation is exact, because the attenuation factor for a given LOR depends on the total distance travelled by both annihilation photons (a+b in Fig. 2.13) and is independent of the emission point along this LOR.
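The independence of the PET attenuation factor from the emission point can be checked numerically. This sketch assumes a uniform attenuator with μ ≈ 0.096 cm⁻¹ (approximately water at 511 keV) and a hypothetical 20 cm chord; the function and constant names are illustrative.

```python
import math


def pair_survival(mu, a, b):
    """Probability that both annihilation photons leave a uniform attenuator
    (linear attenuation coefficient mu, cm^-1) when the emission point splits
    the LOR chord into lengths a and b (cm)."""
    return math.exp(-mu * a) * math.exp(-mu * b)


MU_511 = 0.096  # cm^-1, approximate value for water at 511 keV (assumed)
CHORD = 20.0    # cm, hypothetical chord length through the patient

for a in (0.0, 5.0, 10.0, 17.5):
    # The product equals exp(-mu * (a + b)) = exp(-mu * CHORD) for every split.
    print(f"a = {a:4.1f} cm  survival = {pair_survival(MU_511, a, CHORD - a):.6f}")
```

Every line prints the same survival probability, and the correction factor for the LOR is its reciprocal, exp(μ(a+b)) ≈ 6.8 here. The argument carries over to non-uniform media, because the exponents of the two path segments always add up to the line integral of μ over the whole LOR.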

In practice, PET transmission measurements are usually acquired in 2D mode with the septa in place, using rotating rod sources. Blank (without the patient in the FOV) and transmission (with the patient in the FOV) scans are acquired, and the attenuation correction factor for each LOR is calculated as the ratio of the blank count rate to the transmission count rate, generally after some spatial filtering to reduce statistical variations in the data sets. More refined transmission image segmentation and tissue classification tools have also been proposed to increase the accuracy of attenuation correction (Xu et al 1996). In that case, the attenuation correction matrix is calculated by forward projecting the resulting processed transmission image at the appropriate angles.
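The blank/transmission ratio described above can be sketched on a single sinogram row. The boxcar filter stands in for the unspecified "spatial filtering", and segmentation and forward projection are not shown; the function names and the half_width parameter are hypothetical.

```python
def smooth(row, half_width=1):
    """Boxcar smoothing of one sinogram row (edge bins average the available neighbours)."""
    out = []
    for k in range(len(row)):
        lo, hi = max(0, k - half_width), min(len(row), k + half_width + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out


def attenuation_correction_factors(blank_row, trans_row, half_width=1):
    """ACF = smoothed blank counts / smoothed transmission counts for each LOR.

    Assumes every smoothed transmission bin is strictly positive.
    """
    b = smooth(blank_row, half_width)
    t = smooth(trans_row, half_width)
    return [bk / tk for bk, tk in zip(b, t)]


def correct_emission(emission_row, acfs):
    """Multiply each emission LOR by its attenuation correction factor."""
    return [e * a for e, a in zip(emission_row, acfs)]
```

Smoothing both scans before taking the ratio trades a little spatial resolution in the correction factors for lower noise, which matters because the transmission counts through the thickest parts of the patient are small.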

Fig. 2.14 illustrates some of the transmission scanning geometries used in PET (Bailey 1998). Transmission ring sources use the positron-emitting radionuclides 68Ga/68Ge (half-lives 68 min and 270.8 days, respectively), which coexist in secular equilibrium. In this case, acquisition is performed in coincidence mode between the detector close to the location of the annihilation event and the detector in the opposing fan, which records the second annihilation photon after it has passed through the patient. This design was later modified by collapsing the ring sources radially into continuously rotating 'rod' sources. The local dead-time of the detector block close to the rod source is a major limitation of this approach. This problem was tackled by windowing the transmission data so that only events collinear with the known location of the rod are accepted. This approach was adopted as a standard by scanner manufacturers for a long time, until the recent interest in single-photon sources such as 137Cs (T1/2 = 30.2 yrs, Eγ = 0.662 MeV). A major advantage of single-photon sources is the higher count rate capability resulting from decreased detector dead-time and increased object penetration (Yu and Nahmias 1995). A simple mechanism has been devised to produce a "coincidence" event between the detector that records the transmitted single photon and the detector in the opposing fan near the current location of the single-photon source.
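Rod windowing amounts to a point-to-line distance test in the transaxial plane. The sketch below assumes 2D geometry, LOR endpoints given in cm, a rod position known at the time of each event, and a hypothetical window width; the function names are illustrative and do not correspond to any scanner's software.

```python
import math


def distance_point_to_line(px, py, x1, y1, x2, y2):
    """Perpendicular distance from (px, py) to the line through (x1, y1) and (x2, y2)."""
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("LOR endpoints must differ")
    # |cross(direction, p - p1)| / |direction|
    return abs(dy * (px - x1) - dx * (py - y1)) / length


def accept_transmission_event(lor, rod_xy, window_cm):
    """Keep a coincidence only if its LOR passes within window_cm of the rod."""
    (x1, y1), (x2, y2) = lor
    return distance_point_to_line(rod_xy[0], rod_xy[1], x1, y1, x2, y2) <= window_cm
```

Because the rod rotates, rod_xy must be updated with the source position for each event. Events that fail the test (mostly scattered or random coincidences not collinear with the rod) are discarded.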

The whole-body 3D tomograph installed at the PET centre of Geneva University Hospitals, the ECAT ART (Bailey et al 1997b), was recently upgraded to use collimated point sources of 137Cs and is capable of producing high-quality, scatter-free data in this continuously rotating partial-ring tomograph. The singles transmission source uses a dual multi-slit collimator comprising two sets of 12 slits, with an aperture ratio of 15:1 and an axial pitch of twice the axial crystal ring pitch. Because 137Cs emits photons of higher energy (662 keV) than the 511 keV annihilation radiation, the measured transmission must be scaled to account for the difference. This is relatively straightforward, and a simple exponent can be used.
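The "simple exponent" follows from ACF = exp(∫μ dl): if μ at 511 keV is a constant multiple k of μ at 662 keV along the LOR, then ACF₅₁₁ = ACF₆₆₂^k. The sketch below assumes approximate water values (about 0.096 cm⁻¹ at 511 keV and 0.086 cm⁻¹ at 662 keV) and a single ratio for all tissues; both are assumptions, not the scanner's calibration.

```python
import math

MU_WATER_511 = 0.096  # cm^-1, approximate value for water at 511 keV (assumed)
MU_WATER_662 = 0.086  # cm^-1, approximate value for water at 662 keV (assumed)


def scale_acf_662_to_511(acf_662, ratio=MU_WATER_511 / MU_WATER_662):
    """Convert an attenuation correction factor measured at 662 keV to 511 keV.

    ACF = exp(integral of mu along the LOR). If mu_511 = ratio * mu_662 at every
    point on the LOR, the line integral scales by the same ratio, so
    ACF_511 = ACF_662 ** ratio.
    """
    if acf_662 <= 0.0:
        raise ValueError("attenuation correction factors must be positive")
    return acf_662 ** ratio
```

For 20 cm of water, for example, the measured factor exp(0.086 × 20) ≈ 5.6 becomes exp(0.096 × 20) ≈ 6.8 at 511 keV. Since Compton scattering dominates in soft tissue at both energies, a single ratio is a reasonable first approximation; tissues with very different composition would need their own scaling.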

To maintain adequate counting statistics, a relatively wide energy window (e.g. [350-650] keV for the ECAT ART) is used, because of the finite energy resolution of the scintillation detectors used in PET scanners (typically 10-30% FWHM at 511 keV). The lower part of this window, [350-511] keV, will accept photons scattered once through angles as large as about 57°, or more than once through smaller angles. The upper part, [511-650] keV, may accept a small number of photons scattered through small angles. Rejection of scattered photons on the basis of energy discrimination therefore has limited performance. Qualitatively, scatter decreases contrast by mispositioning events during reconstruction; quantitatively, it causes errors in the reconstructed radioactivity concentrations by overestimating the actual activity. The impact of scatter in emission tomography generally depends on the photon energy, the energy resolution of the tomograph and the energy window settings, as well as on the object shape and the source distribution. Many of these parameters are non-stationary, which complicates the development of proper scatter correction techniques. Correction for scatter nevertheless remains essential, not only for quantification but also for lesion detection and image segmentation. In the latter case, if the boundary of an activity region is distorted by scatter events, the accuracy of the calculated volume will be affected (Zaidi 1996a).
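The largest single-scatter angle accepted by a given lower energy threshold follows from the Compton formula E' = E₀ / (1 + (E₀/mₑc²)(1 - cos θ)). The sketch below inverts it for a 511 keV photon; the function names are illustrative, and perfect energy resolution is assumed, so blurring by the detector is ignored.

```python
import math

ELECTRON_REST_ENERGY_KEV = 511.0


def scattered_energy(e0_kev, theta_deg):
    """Compton formula: energy of a photon of energy e0 after scattering through theta."""
    theta = math.radians(theta_deg)
    return e0_kev / (1.0 + (e0_kev / ELECTRON_REST_ENERGY_KEV) * (1.0 - math.cos(theta)))


def max_single_scatter_angle(lower_threshold_kev, e0_kev=511.0):
    """Largest single-scatter angle (degrees) whose scattered energy still reaches the threshold."""
    if not 0.0 < lower_threshold_kev <= e0_kev:
        raise ValueError("threshold must lie in (0, e0]")
    # From 1/E' = 1/E0 + (1 - cos(theta)) / (m_e c^2)
    cos_theta = 1.0 - ELECTRON_REST_ENERGY_KEV * (1.0 / lower_threshold_kev - 1.0 / e0_kev)
    # Thresholds below the backscatter energy (about 170 keV) accept every angle.
    cos_theta = max(-1.0, cos_theta)
    return math.degrees(math.acos(cos_theta))
```

For the 350 keV threshold of the ECAT ART window, cos θ = 0.54 and the accepted single-scatter angle reaches roughly 57°. Raising the threshold narrows this range, but with 10-30% FWHM energy resolution it also discards unscattered events.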

Fig. 2.14. : Diagram illustrating schematic examples of transmission data acquisition geometries for PET

From top left, clockwise, they are: positron-emitting ring source measuring transmission in coincidence mode, rotating rod positron-emitting sources (coincidence mode), rod sources fixed on a rotating partial-ring tomograph (coincidence mode), and finally, rotating single-photon sources (137Cs) where attenuation factors along LORs are calculated according to the location of the photons detected on the opposing side of the ring and the current source position.

As stated in section 2.5, the sensitivity to scattered coincidences is greater in 3D mode than in 2D mode. In 2D PET, scattered events typically make up less than 15% of the total acquired events. Scatter increases two- to threefold in 3D PET compared with 2D and may constitute 50% or more of the total recorded events in whole-body imaging. Accurate scatter correction methods are therefore required. Many schemes have been proposed for scatter correction in 3D PET; these are discussed fully in chapter 7.

2.6 Innovative developments in PET instrumentation

In recent years, there has been increased interest in using conventional SPECT scintillation cameras for PET imaging; however, their count rate performance is a limiting factor. A sandwich-like construction of two different crystals allows the simultaneous use of gamma- and positron-emitting radiopharmaceuticals, referred to as multiple emission tomography (MET) (Kuikka et al 1998). This may be implemented with solid-state photodiode readouts, which also allow electronically collimated coincidence counting (Fig. 2.15). The resultant images would provide finer resolution (less than 5 mm), better contrast and a ten-fold improvement in coincidence sensitivity compared with what is currently available. Photodiode noise might be a major problem, but it can be reduced to some extent, albeit at a significant increase in cost.

The performance of a detector block design offering high resolution and high count rate capability in both detection modes was recently evaluated (Dahlbom et al 1998). The high light output of LSO (approximately 5-6 times that of BGO) allows the construction of a detector block with intrinsic resolution at 140 keV similar to that of a conventional high-resolution BGO block detector at 511 keV. However, the intrinsic radioactivity of LSO prevents its use in single-photon counting mode. YSO is a scintillator with a higher light output than LSO but poorer absorption characteristics. YSO and LSO could be combined in a phoswich detector block, with YSO in the front layer used for low-energy SPECT imaging and LSO in a second layer used for PET imaging. Events in the two detector materials can be separated by pulse shape discrimination, since the light decay times of YSO and LSO differ (70 and 40 ns, respectively).
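One simple form of pulse shape discrimination compares the charge collected in an early gate with the total charge. For a single-exponential light pulse with decay time τ, the early fraction is 1 - exp(-t_gate/τ), so τ can be recovered and the event assigned to the layer whose decay time is closest. This sketch assumes noise-free single-exponential pulses, a total charge integrated over a long gate, and a hypothetical 50 ns early gate; it illustrates the charge-comparison idea rather than the method evaluated by Dahlbom et al.

```python
import math

DECAY_TIME_NS = {"LSO": 40.0, "YSO": 70.0}  # decay times quoted in the text


def early_fraction(decay_ns, gate_ns):
    """Fraction of a single-exponential light pulse collected within gate_ns."""
    return 1.0 - math.exp(-gate_ns / decay_ns)


def estimate_decay_time(q_early, q_total, gate_ns):
    """Invert early_fraction: tau = -gate / ln(1 - q_early / q_total)."""
    frac = q_early / q_total
    if not 0.0 < frac < 1.0:
        raise ValueError("early fraction must lie strictly between 0 and 1")
    return -gate_ns / math.log(1.0 - frac)


def classify_layer(q_early, q_total, gate_ns=50.0):
    """Assign an event to the crystal whose nominal decay time is closest to the estimate."""
    tau = estimate_decay_time(q_early, q_total, gate_ns)
    return min(DECAY_TIME_NS, key=lambda name: abs(DECAY_TIME_NS[name] - tau))
```

With a 50 ns gate, an LSO pulse deposits about 71% of its light early and a YSO pulse about 51%, so the two populations separate well in the noise-free case; photon statistics in real pulses broaden both distributions.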

Fig. 2.15. : Possible detector design of a multiple emission tomography camera

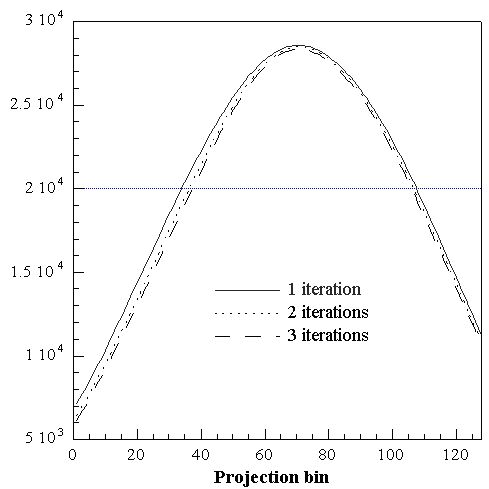

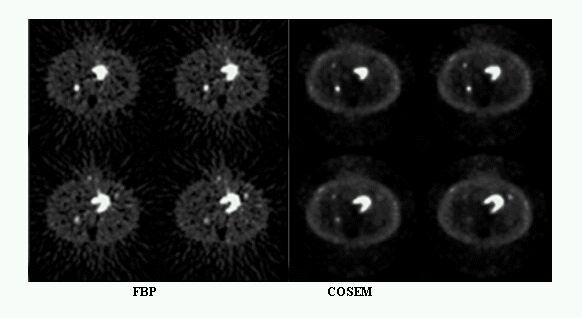

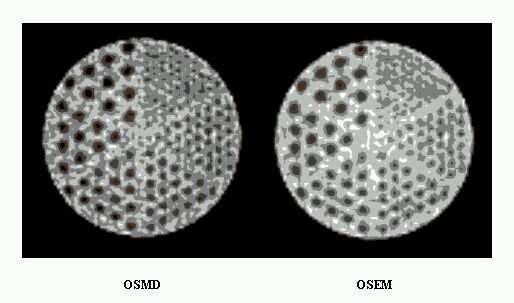

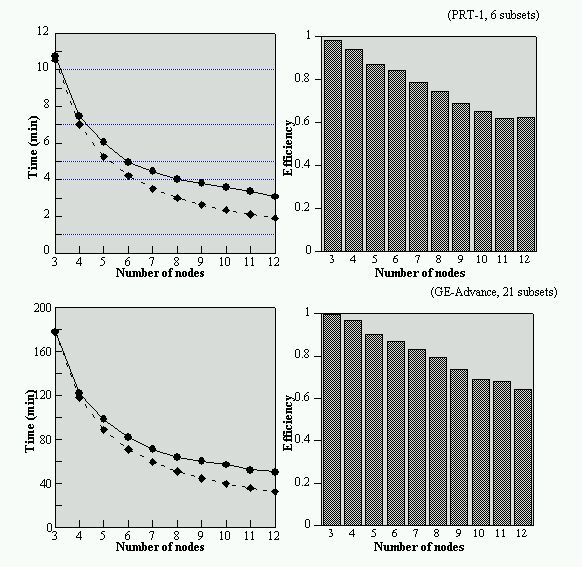

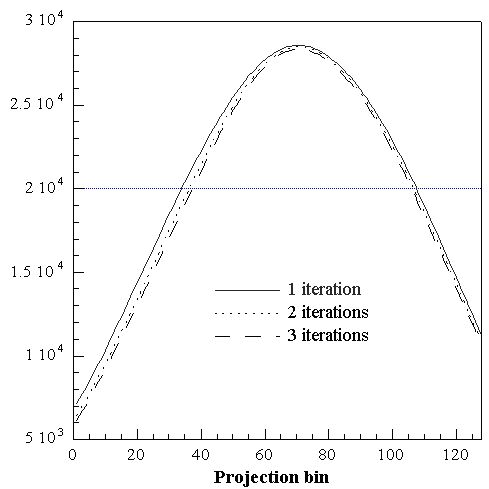

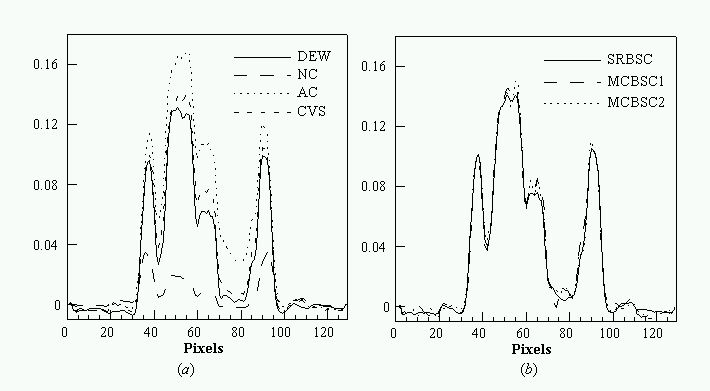

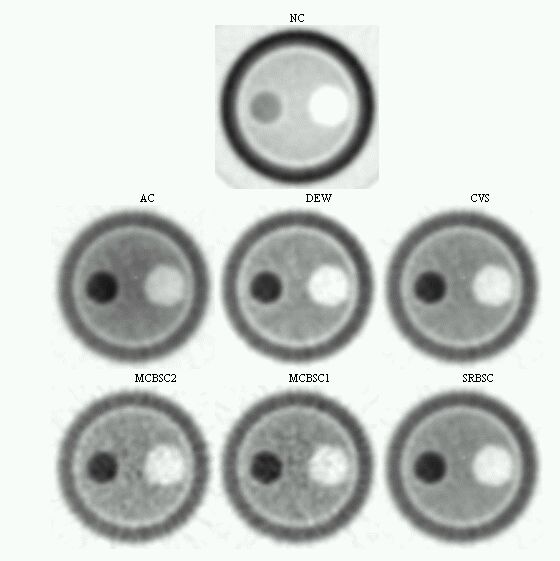

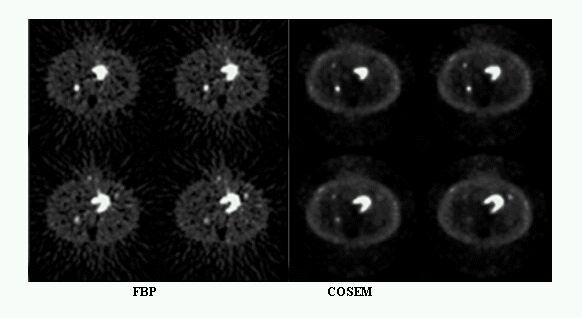

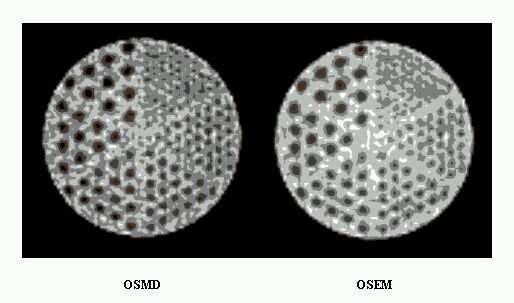

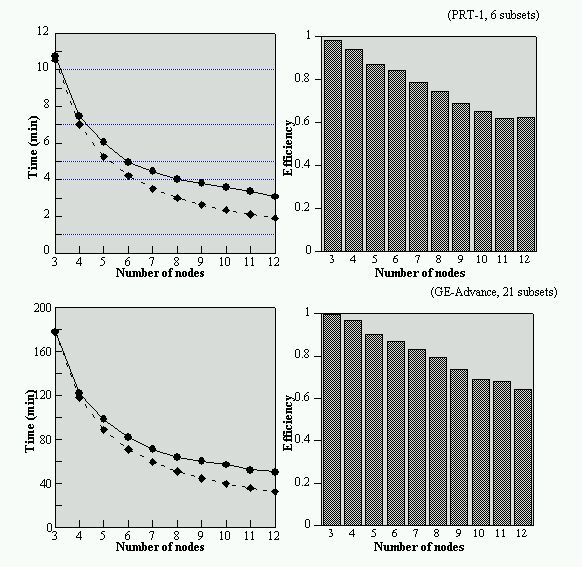

The detector blocks employ two separate crystals, one for single-photon emitters (yttrium oxyorthosilicate, YSO) and one for positron emitters (lutetium oxyorthosilicate, LSO). Rectangular photomultiplier tubes (PMTs) are preferred because they reduce the dead space between the PMTs compared with circular ones.