LARA

LARA continues in the form of the C-LARA project.

LARA (Learning and Reading Assistant) is a collaborative open source project, active since mid-2018, whose goal is to develop tools for converting plain texts into an interactive multimedia form that helps learners develop L2 skills through reading. The basic approach is in line with Krashen's influential Theory of Input, which holds that language learning proceeds most successfully when learners are presented with interesting and comprehensible L2 material in a low-anxiety situation. LARA implements this abstract programme by giving L2 learners concrete assistance that makes texts more comprehensible and so helps them develop their reading, vocabulary and pronunciation skills. In particular, LARA texts include translations and human-recorded audio attached to words and sentences, and a personalised concordance constructed from the learner's reading history. Simply by clicking on or hovering over a word, the learner can always answer three questions: what does it mean, what does it sound like, and where have I seen it before? The figure below shows an example.

The LARA tools are made available through a free portal and are divided into two layers. The core LARA engine consists of a suite of Python modules, which can also be run stand-alone from the command line. These modules are accessed through a web layer implemented in PHP. The resulting LARA texts can be accessed through the CALLector social network, which was released on 26 November 2021 and is currently undergoing initial testing. Comprehensive online documentation is available.

LARA is being developed by an international consortium that includes the University of Iceland, Trinity College Dublin, the University of Adelaide, Ruppin Academic Center, the Ferdowsi University of Mashhad, Flinders University, the University of Gdansk, Tianjin Chengjian University, and several independent scholars, including Cathy Chua and Matt Butterweck. Our joint SLaTE 2019 paper gives more details. The LARA content tab has examples of LARA-annotated texts in over a dozen languages.

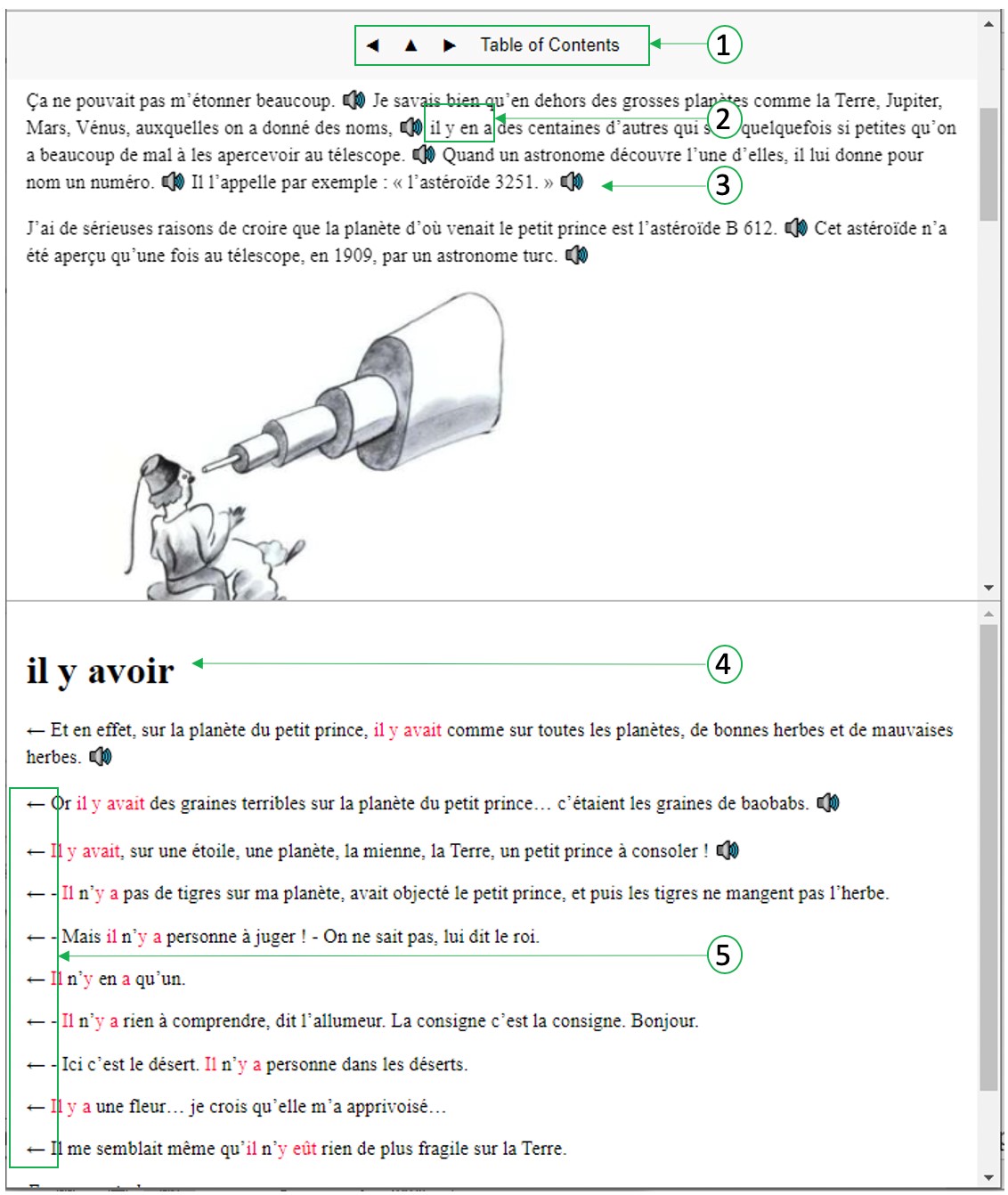

Annotated screenshot from the LARA version of Le petit prince, from Bédi et al. (2020). The user navigates using the controls at the top (1). The text is in the upper pane. Clicking on a word displays information about it in the lower pane. Here, the user has just clicked on part of the multiword il y a = "there is" (2), showing an automatically generated concordance (4). Hovering the mouse over a word plays audio and shows a popup translation. Clicking on a loudspeaker plays audio for the preceding sentence (3). The back-arrows (5) link each line in the concordance to its context of occurrence.
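To make the idea of a personalised concordance concrete, here is a minimal sketch of how one could be built from a learner's reading history. It is not LARA's actual implementation: the `Occurrence` record, the `build_concordance` function and the `(page_id, sentences)` input format are all hypothetical, and the sketch tokenises on whitespace, with no lemmatisation and no multiword handling (so it would not group il y a as LARA does). Each concordance entry keeps the page it came from, which plays the role of the back-arrow linking a concordance line to its context.

```python
from collections import defaultdict
from dataclasses import dataclass

# Punctuation stripped from token edges (simplifying assumption).
PUNCT = ".,;:!?\"'«»()"


@dataclass(frozen=True)
class Occurrence:
    page: str      # hypothetical page identifier (the "back-arrow" target)
    sentence: str  # sentence in which the word occurred


def build_concordance(reading_history):
    """Map each word to its occurrences, in the order the learner read them.

    reading_history: list of (page_id, [sentence, ...]) pairs (hypothetical format).
    """
    concordance = defaultdict(list)
    for page_id, sentences in reading_history:
        for sentence in sentences:
            # Naive whitespace tokenisation; real systems lemmatise and detect multiwords.
            for token in sentence.lower().split():
                word = token.strip(PUNCT)
                if word:  # skip tokens that were pure punctuation, e.g. "!"
                    concordance[word].append(Occurrence(page_id, sentence))
    return concordance


# Usage: two pages the learner has read so far.
history = [
    ("p1", ["Il y a un mouton.", "Le mouton mange."]),
    ("p2", ["Dessine-moi un mouton !"]),
]
concordance = build_concordance(history)
for occ in concordance["mouton"]:
    print(occ.page, occ.sentence)
```

Because the concordance is rebuilt from what the learner has actually read, a word's entries grow as reading progresses, which is what makes it personalised rather than a fixed corpus index.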